The use of social media monitoring products by police raises serious concerns about threats to First Amendment rights.

By Rachel Levinson-Waldman, J.D.

Deputy Director, Liberty & National Security

Brennan Center for Justice

By Mary Pat Dwyer, J.D.

Fellow, Liberty & National Security

Brennan Center for Justice

The Brennan Center released documents Wednesday from the Los Angeles Police Department shedding light on the services being marketed by social media monitoring firm Voyager Labs to law enforcement. The records, obtained through a freedom of information lawsuit, illuminate Voyager’s own services and offer a broader window into the typically secretive industry of social media monitoring.

The documents raise serious concerns about how the use of such products by police threatens First Amendment rights and has a disproportionate impact on Muslims and other marginalized groups, as well as whether companies like Facebook and Twitter are living up to their promises to keep surveillance companies from misusing data in ways that violate the platforms’ terms of service.

In its sales pitches, Voyager declares that it can assess the strength of people’s ideological beliefs and the level of “passion” they feel by looking at their social media posts, their online friends, and even people with whom they’re not directly connected. The company also claims that it can use social media and artificial intelligence tools to accurately assess the risk to public safety posed by a particular individual.

In reality, however, the posts and activities of those who commit violent acts resemble the activity of countless other users who never engage in violence. Using law enforcement resources to target all social media users who share interests with individuals who have engaged in violence would produce a crushing volume of information and thousands or even millions of false positives. And investigations and arrests based on AI-driven conclusions are likely to fall disproportionately on activists and communities of color.
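The scale of the false-positive problem follows from simple arithmetic. The Python sketch below is purely illustrative: the function name `screening_outcomes` and every number in it (10 million users screened, 10 of whom go on to commit violence, and a tool that flags 99 percent of them while wrongly flagging only 1 percent of everyone else) are hypothetical assumptions, not figures from Voyager or the LAPD records.

```python
# Hypothetical base-rate illustration. None of these numbers come from
# Voyager or the LAPD documents; they are assumptions chosen only to show
# how false positives scale when the event being predicted is rare.


def screening_outcomes(population, actual_threats, sensitivity, false_positive_rate):
    """Return (true_positives, false_positives) for a hypothetical screening tool.

    population          -- number of users screened
    actual_threats      -- how many of those users will actually commit violence
    sensitivity         -- fraction of actual threats the tool flags
    false_positive_rate -- fraction of everyone else the tool wrongly flags
    """
    non_threats = population - actual_threats
    true_positives = actual_threats * sensitivity
    false_positives = non_threats * false_positive_rate
    return true_positives, false_positives


# Assumed inputs: 10 million users, 10 real threats, and an optimistic,
# assumed level of accuracy (99% sensitivity, 1% false-positive rate).
tp, fp = screening_outcomes(10_000_000, 10, 0.99, 0.01)
precision = tp / (tp + fp)  # share of flagged users who are real threats

print(f"correctly flagged: {tp:.1f}")
print(f"wrongly flagged:   {fp:,.0f}")
print(f"share of flags that are real threats: {precision:.4%}")
```

Even under these generous assumptions, the tool flags roughly 100,000 people in order to find about 10, so fewer than 1 in 10,000 flagged users is an actual threat. Nothing in Voyager's materials shows accuracy anywhere near this level.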

Two case studies that Voyager sent the LAPD illustrate the company’s troubling promises.

The first examines the social media activity of Adam Alsahli, who attacked the naval air station in Corpus Christi, Texas, in May 2020. After the attack, Voyager analyzed Alsahli’s “activities and interactions” and highlighted social media activity it claimed could aid in an investigation of the incident. The company also suggested that Alsahli’s behavior on social media showcased the types of activity that would invite “proactive vetting and risk assessment.”

According to Voyager’s own descriptions, however, its analysis seems to rely heavily on ordinary religious themes and references to Alsahli’s Arab heritage and language.

While Alsahli himself may have been motivated by Islamic extremism, flagging this type of content to identify purported threats would do little more than target millions of social media users who are Muslim or speak Arabic, subjecting them to discrimination online that mirrors their treatment offline. Voyager’s claims that its tools offer “immediate and complete translation” of Arabic and “100 other languages” are suspect as well: natural language processing tools have widely varying accuracy rates across languages, and Arabic has proven particularly challenging for automated tools. And even a literal translation of social media content often misses key cultural context.

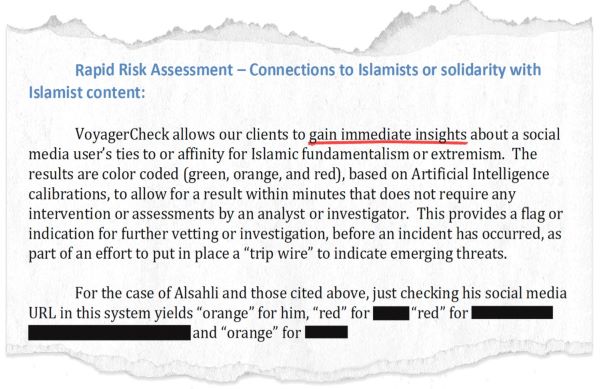

Voyager’s claims about its AI tools are similarly tenuous. The service appears to focus on events like terrorism or mass violence, but there is no evidence that the language and images used on social media are predictive of such rare events, whether analyzed by human reviewers or automated tools. Of even more concern, Voyager says it uses AI tools to produce a color-coded risk score signifying the user’s “ties to or affinity for Islamic fundamentalism or extremism,” with no human review involved.

While human involvement would hardly solve the myriad underlying issues, Voyager offers no proof for its assertion that an automated system could, with any accuracy, gauge “within minutes” whether someone subscribes to a particular ideology. Indeed, this approach is reminiscent of the repeatedly debunked assertion that there is a common or identifiable “path” to radicalization.

In fact, Voyager’s own language exposes a fatal weakness in its approach: fundamentalism and extremism are not illegal, and an “affinity” for them is not evidence of planning for violence. Even an accurate categorization of individuals with “ties” to “extreme” ideologies, whether underpinned by Islamic or any other beliefs, would provide no actionable information to law enforcement.

A second case study, on Muslim Brotherhood activist Bahgat Saber, who urged his social media followers to infect officials at Egyptian consulates and embassies with Covid-19, reveals both the astonishing amount of information available through social media and the tenuous connections that Voyager considers evidence of potential extremism. For this study, Voyager began by extracting information from the public profiles of Saber’s nearly 4,000 Facebook friends. Without any suspicion that these individuals had done anything illegal, Voyager pulled their information into a searchable, monitorable dataset.

When Voyager concluded that none of Saber’s Facebook friends could be tagged as “extremist threat[s],” it went a step further, analyzing the connections of Saber’s friends — people to whom he had no direct connection — and suggesting that those individuals’ ideologies were indicative of Saber’s.

The problems with this logical leap are obvious: social media contacts range from distant relatives to classmates and acquaintances from years past to people encountered at a single event. In other words, these contacts may include individuals with whom a person has not spoken in years, or has spoken with only a handful of times. There is no reason to assume that a person even knows these contacts’ ideologies, much less shares them.

The emphasis in Voyager’s materials on Muslim users’ activity also suggests serious bias and a disregard for data indicating that the far right presents the greatest threat of extremism in the United States. For example, while some Voyager materials describe its ability to assess ideological strength in general terms, others, like the Alsahli case study, seem to suggest these tools are targeted at Muslim content. And it is telling that both of Voyager’s case studies analyze Muslim men.

Finally, the fact that Voyager — like other companies such as Media Sonar and Dataminr, which we’ve previously reported on — is able to harvest social media information years after the major platforms barred the use of their sites for surveillance raises the question of whether the platforms are doing enough to identify and block these companies.

We obtained these documents through our public records litigation against the LAPD, which we pursued (with the assistance of law firm Davis Wright Tremaine) as part of our ongoing effort to increase transparency of and accountability for law enforcement’s monitoring of individuals and groups on social media. It’s time for change throughout the ecosystem of social media surveillance, from the companies developing these tools to the platforms failing to aggressively police them to the law enforcement agencies seeking them out and purchasing them.

Originally published by the Brennan Center for Justice, 11.17.2021, under the terms of a Creative Commons Attribution-No Derivs-NonCommercial license.