The modern era of surveillance.

By Peter Andrey Smith

Science Journalist and Senior Contributor

Undark Magazine

William C. Thompson does not ordinarily hunt for bugs, and his scrutiny of a type of computational algorithm that analyzes DNA started out innocently enough. Investigators used software in a case from Southern California where, in March of 2016, police had pulled over a man named Alejandro Sandoval. The traffic stop turned into a criminal investigation, which ultimately hinged on what amounted to a single cell’s worth of evidence.

Thompson, a professor emeritus of criminology, law, and society at the University of California Irvine, published a paper about the case in 2023, which ultimately resonated with a much larger issue: the bitter, public battle over genetic information, artificial intelligence, and the societal applications of computational tools that leverage large quantities of data.

It was just one flashpoint in the modern era of surveillance — a discipline that seems to have leapfrogged from old-school wire taps to algorithmic panopticons while the public was paying little attention. Earlier this year, for example, scientists demonstrated that it is both possible and plausible to pluck human DNA samples out of an air-conditioning unit. Even if a criminal entered a room for only a short time, wore gloves, wrapped a cellphone in tinfoil to avoid detection, and bleached any blood off the floor, the A/C unit would still passively collect traces of DNA, which could then be gathered, isolated, and used to identify a perp. The same is also true, of course, of any individual — even you. The state of modern surveillance, then, should concern all of us.

Passive means of listening and looking, meanwhile, are ubiquitous: In some jurisdictions, the sound of a gunshot can trigger a computationally generated alert, geolocating the sound and dispatching authorities. And all police departments in U.S. cities with populations of 1 million or more deploy automatic license plate recognition cameras. Your vehicle’s characteristics — derived from those same cameras — are likely being logged by a company that claims it was involved in solving 10 percent of all crimes in the U.S. If the camera didn’t capture every digit or letter on your license plate, no worries: An algorithm called DeepPlate will decipher blurry images to generate leads in the investigation of a crime.

Even seemingly innocuous infractions can nudge citizens into the dragnet of modern computational surveillance. In a quid pro quo practiced in at least one jurisdiction, petty misdemeanors, like walking your dog off leash, are frequently handled as a barter: Give us a sample of your DNA, and we’ll drop the charges. That could put your genome into a national database that, at some point down the line, could potentially link one of your relatives — even those not yet born — to future crimes. Meanwhile, if you end up behind bars, several systems will not only monitor your calls and contacts, but they might also use software to “listen” for problematic language.

None of these examples are hypothetical. These technologies are in place, operational, and expanding in jurisdictions across the globe. But the typical questions they tend to animate — questions about privacy and consent, and even the existential worry over the creep of artificial intelligence into government surveillance — belie some more basic questions that many experts say have been largely overlooked: Do these systems actually work?

A growing number of critics argue that they don’t, or at least that there is little in the way of independent validation that they do. Some of these technologies, they say, lack a proven record of reliability, transparency, and robustness, at least in their current iterations, and the “answers” these tools provide can’t be explained. Their magic, whatever that might be, unfolds inside a metaphoric black box of code. As such, the accuracy of any output cannot be subjected to the scrutiny typical of scientific publication and peer review.

In the traditional lingo of software engineers, these systems would be called “brittle.”

Arguably, the same might be said of the case of Alejandro Sandoval. During his traffic stop, police claimed that they turned up drugs — specifically, about 80 grams of methamphetamine, in two plastic baggies, which reportedly slipped out from under his armpits. But his defense floated another possibility: the drugs — and the DNA contained on their exterior — belonged to someone else.

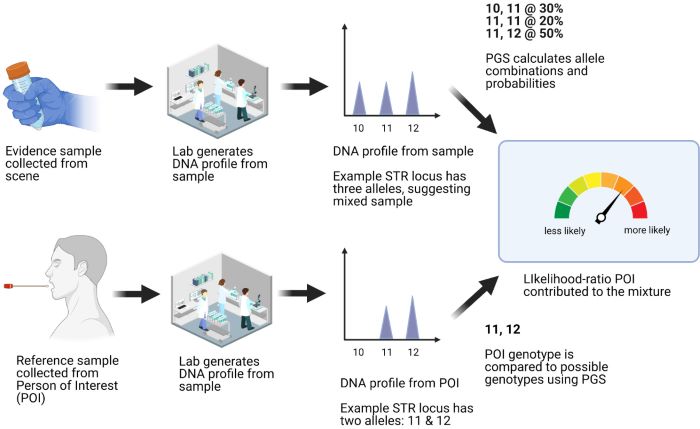

As the case ground its way toward trial, Sandoval’s attorneys requested DNA testing. The results suggested one of the bags contained a mixture of multiple people’s DNA. The main contributor appeared to be female, which, assuming there were only two contributors, left only a small quantity of one other person’s DNA, amounting to a mere 6.9 picograms of genetic stuff — about the amount found inside a human cell. Because the quantity of DNA for analysis was so low, and because it appeared to be a mixture contributed by more than one person, analysts deemed it “not suitable” for manual comparison, prompting examiners to feed the data into a computer program.

The goal was to make use of another modern surveillance-and-investigatory tool called probabilistic genotyping — a sort of algorithmic comb that straightens out a tangle of uncertain genetic data. According to data presented at a March 2024 public workshop at the National Academies of Sciences, Engineering, and Medicine, the technique is now in use at more than 100 crime labs nationwide, and proponents champion these algorithmic solutions as a way to get the job done when no one else can. In Sandoval’s case, the output would provide a measure of likelihood that DNA found on the baggies belonged to him.

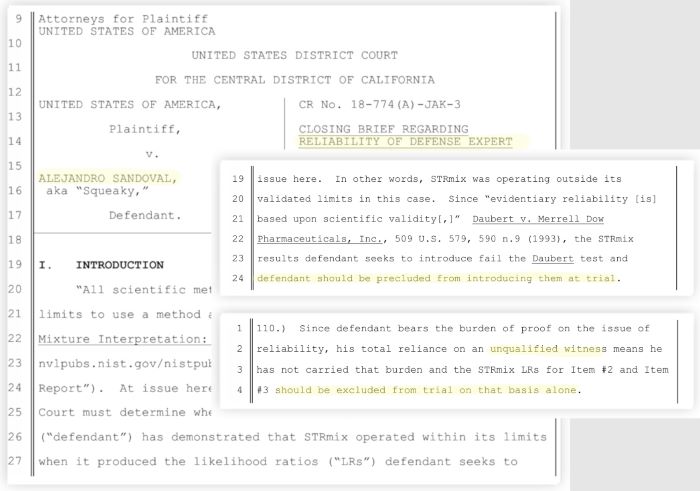

Prosecutors initially called in Thompson, the UC Irvine professor, to discuss the statistical output because the results suggested they had the wrong suspect. Two different software programs indicated that Sandoval’s DNA was not part of the mixture: It was more probable that he had not contributed to the evidence swabbed off the bags.

Something, it seemed, did not add up.

The more Thompson looked at the evidence, the more he began to think that getting accurate results was “probably wishful thinking.” While the dueling software programs both suggested Sandoval was not a likely contributor to the mixture, one program, TrueAllele, did so much more conclusively. According to TrueAllele, the case’s DNA testing was up to 16.7 million times more likely to have yielded the given results if Sandoval’s DNA was not included in the mixture. The other program, STRmix, suggested it was only between 5 and 24 times more likely.
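One way to read these figures, offered as a rough sketch and not as either vendor's actual computation, is as a likelihood ratio. The symbols $E$ (the DNA test results), $H_1$ (Sandoval contributed to the mixture), and $H_2$ (he did not) are labels assumed here for illustration; they do not come from the case reports. The worked numbers use the upper TrueAllele figure quoted above.

```latex
\begin{align*}
\mathrm{LR} &= \frac{P(E \mid H_1)}{P(E \mid H_2)}
  && \text{(values below 1 favor } H_2\text{)} \\[4pt]
\text{TrueAllele:}\quad \frac{1}{\mathrm{LR}} &\approx 1.67 \times 10^{7},
  & \log_{10}\!\frac{1}{\mathrm{LR}} &\approx 7.2 \\[4pt]
\text{STRmix:}\quad \frac{1}{\mathrm{LR}} &\approx 5 \text{ to } 24,
  & \log_{10}\!\frac{1}{\mathrm{LR}} &\approx 0.7 \text{ to } 1.4 \\[4pt]
\frac{1.67 \times 10^{7}}{24} &\approx 7 \times 10^{5},
  & \frac{1.67 \times 10^{7}}{5} &\approx 3.3 \times 10^{6}
\end{align*}
```

Under these assumptions, both programs point the same way, but the strength of support they report differs by roughly six orders of magnitude.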

Proponents of the technology would later question his analysis, and his objectives; in a letter published in response to critics, however, Thompson maintained his pursuit was in earnest.

“When I learned that two programs for probabilistic genotyping had produced widely different results for the same case,” he wrote in the Journal of Forensic Sciences, “I wanted to understand how that could have happened.”

In questioning how much statistical certainty could derive from so little hard evidence, Thompson threw a light into a dark corner of the surveillance state where a whole suite of data-collection tools purport to solve crimes and produce extraordinary claims that are sometimes exceedingly difficult to verify.

“How do we do a critical assessment of those claims?” Thompson said in a recent interview. “How do we assure that the claims are responsible? That they’re not overselling or overstating the strength of their conclusions? And all I’m saying is we haven’t.”

And therein lies the dispute.

Step back from the particulars of the Sandoval case, and you’ll see that these questions pertain to other algorithms that make predictions and generate statistical probabilities. These systems represent an entire class of tools — and reflect on broader patterns for how they’re being uncritically deployed. As Daphne Martschenko, a researcher at the Stanford Center for Biomedical Ethics, told me: “We increasingly live in a world where more and more data is collected on us, oftentimes without our full knowledge, or without our complete consent.” One small step, in other words, in the giant leap towards the end of privacy as we know it.

Whether or not you care — and you might not care if you think of yourself as a law-abiding citizen — we don’t typically take these routine violations of autonomy and the loss of privacy seriously. The normalization of video surveillance and facial recognition technologies, including ubiquitous consumer products like doorbell cams or the auto-tagging features that detect your child’s face at summer camp, has led to complacency; in turn, several scholars argued in a recent law review paper, “we are being programmed not to worry about forms of surveillance that once struck many of us as creepy, ambiguous threats.”

Take DNA, now the gold standard for forensic investigations; its applications in the aid of law enforcement might have creeped you out just a few decades ago. While the O.J. Simpson trial put the DNA wars front and center in the mid-90s, contemporary uses of forensic DNA technologies are exponentially more powerful yet get less scrutiny. Take investigative genetic genealogy, which compares DNA profiles lifted from crime scenes with publicly available databases, including those provided by direct-to-consumer testing companies. In a phone interview, Sarah Chu, the director of policy and reform at the Perlmutter Center for Legal Justice at Yeshiva University Cardozo School of Law, and moderator of the recent National Academy of Sciences workshop that discussed the issue, told me that these applications have not sparked a similar national conversation about “what would be allowed, what’s not allowed, what would invade privacy, what limitations could be put on technology to make sure that they could both address public safety as well as have the lightest footprint possible.”

“Once DNA became ubiquitous in the system,” she added, “I think a lot of those sensitivities have fallen away. And unfortunately, they’ve fallen away at a time when technology has advanced so we can take more information than ever from people.”

But it’s more than that: Data-collection systems perpetuate a demand for more data, and we’ve grown accustomed to having data collected for one reason being used for another application entirely. “There are no restrictions that, say, when you have this data, you can’t use it for anything else,” Chu said. The availability of tools that deconvolute mixtures of DNA further encourages law enforcement agencies to gather more, routinely swabbing evidence that is often handled by more than one person, such as guns and drugs. In widening the net, the agencies capture more data, including low-level mixtures that not only create uncertainty in the scientific processes but also stretch the interpretive capacity. The result, Chu said, is “borderline reliable results on one end, and, on the other end, there’s this incentive to swab as many things as possible, because you’re going to be able to get an answer with probabilistic genotyping.”

We are living in a “brave new world of prediction,” but as with any prediction, there’s still the age-old problem of falsifiability. If the ground truth cannot be known, then it’s impossible to assess accuracy. These unknowns are further complicated when the analyses take place inside the prototypical black box. “We live in a capitalist society where industry is driven by profit and financial incentives, and revealing the black box in terms of the software that they’re using is not necessarily in their best interests,” Martschenko said. “And, of course, in the case of the use of these genetic technologies by law enforcement, there are real implications for real people in the world.”

The government’s case against Sandoval seemed at once routine and extraordinary. Thompson, the UC Irvine professor, said he thought it merited attention for several reasons. For one, the prosecuting attorney attempted to exclude certain DNA results, marking what is likely the first time the government tried to toss out probabilistic genotyping. The results, after all, were unfavorable to the prosecution: They suggested the baggies of meth had no real trace of the suspect’s DNA, implying Sandoval had not touched the bags and was possibly innocent. But the dispute never made it to trial. In July of 2023, Sandoval pleaded guilty to drug charges. He was sentenced to 90 days in prison, plus probation. If statistics lent an air of authority to one California man’s innocence, then the output of the legal system nonetheless rendered him guilty.

Thompson did not name the case directly in his original 2023 paper. But what he claimed was a good-faith effort to examine two software programs incited an acrimonious back-and-forth with their makers. Mark Perlin, the CEO and chief scientific officer of the Pittsburgh company that makes TrueAllele, coauthored a lengthy rebuttal on a preprint repository, calling Thompson’s work “unbalanced, inaccurate, and scientifically flawed.” By Perlin’s count, the paper contained nearly two dozen “conceptual errors” and “120 mistaken or otherwise problematic assertions.” Thompson, in turn, posted a response on the same preprint server, arguing that, among other things, Perlin made some demonstrably false accusations, such as the claim that his original paper named Sandoval. (Perlin identified the case by name in his rebuttal, but, in a subsequent letter posted to his company’s website, he claimed Thompson had first named names in following through on an offer in his acknowledgements to make reports about Sandoval’s case “available, on request.”)

The journal that published Thompson’s original paper accepted a much shorter letter from Perlin and co-authors, as well as one from other researchers. Thompson responded to both of them. As he saw it, such cutting critiques came with the territory. The firms marketing STRmix and TrueAllele have held their proprietary source code close, turning down requests or placing restrictions on research licenses that would potentially allow independent scientists to test the software. (Perlin disputes these accusations, saying his company does license the software “to academic forensic science programs.” But he does not provide it to researchers he deems “advocates” who, in his view, are more interested in “breaking things instead of testing them.”) Computer science may be rooted, like academia, in openness, but Thompson saw firsthand how companies marketing these tools responded. “They wrote a letter that accused me of all kinds of scientific misconduct and claimed I was using their proprietary data and made all kinds of wild allegations, hoping to, like, get the article suppressed,” Thompson said. “It didn’t succeed.”

One accusation Perlin and others leveled in their preprint was that Thompson was a lawyer in a lab coat. “Thompson,” they wrote, “writes like a lawyer masquerading as a scientist.” Thompson said it’s true that he’s worked as an attorney, and, indeed, in having previously litigated defamation cases, he did not believe his opponents stood a chance of winning. Although he remained unbowed, Thompson worried that these tactics might deter others from testing the technology’s limitations.

For his part, Perlin saw himself as a champion of the scientific truth. In a written response to questions from Undark, Perlin said, “published validation studies cover a wide range of data and conditions.” In an interview, he bristled at suggestions from government groups that probabilistic genotyping software requires more independent review. “The scientists respect the data, the technologies, the software, the tools used in the laboratory, use all the data and try to give unbiased, objective, accurate answers based on data,” he said. “And these advisory groups and many academics don’t.” Perlin went on to reiterate his chief objection: “They’re not functioning like scientists; they’re functioning like advocates.”

United States of America vs. Sandoval was not the first time the two most common tools already in use, STRmix and TrueAllele, generated different results with the same raw data. (A 2024 study suggested, at least in certain conditions, the dueling algorithms “converged on the same result >90% of the time.”) It’s almost like having two calculators that don’t give the same answer. To Thompson, that merited scrutiny. Perlin saw no point to the paper.

Regulators, however, are paying attention. Discussions about these technologies were a focal point at hearings before the Senate Judiciary Committee in January, and at a separate advisory committee on evidence rules that met in Washington, D.C. Researchers have also questioned the use of AI technologies in criminal legal cases. Andrea Roth, a professor at the University of California Berkeley School of Law, has suggested that the threshold for accepting machine-generated evidence might be if two machines arrive at the same conclusion. She has also suggested that existing rules that govern what are known as prior witness statements might be a model for challenging the credibility of algorithms, thus creating a sort of mandate to reveal any prior inconsistencies or known errors. For now, though, the law is unsettled, and the system appears wholly unprepared for a future that has already come to pass.

If nothing else, the case against Sandoval suggests that there are limits, and that people — police and prosecutors and defense attorneys — are testing them. Perlin did not think this was a case illustrating the limits of his software, but he acknowledged probabilistic genotyping has limitations. “It’s like you look through a telescope; at what point does it stop working? Well, there’s no hard number. At some point, you just don’t see stars anymore. They’re too far away.”

The publication of one defendant’s genetic profile was perceived as both a violation of privacy and a public service. And it was concerning for more reasons than one. As Thompson said: “This has been a common theme that we’ve seen with every new development of the technology is that there’s a lot of enthusiasm, it’s good for society generally, but we don’t have good mechanisms to keep people from taking it too far. And we have lots of incentives for people to do that and not a whole lot of controls against it.”

The two sides disagreed about who was seeing something where there was nothing.

Perhaps we need to fundamentally reevaluate our perception about the computational tools that surveil people. In “AI, Algorithms, and Awful Humans,” privacy scholars recently made the case that these tools decide differently than you might think. Contrary to popular belief, it’s unreasonable to treat them as cold, calculating machines, unshackled from human bias and discrimination. The authors also note: “Making decisions about humans involves special emotional and moral considerations that algorithms are not yet prepared to make — and might never be able to make.”

Routine surveillance — be it geolocating the source of a gunshot, using facial recognition tools, or isolating a mixture of DNA off two plastic baggies of meth — goes hand in hand with control. Algorithms and the predictions they make reinforce patterns, solidifying bias and inequality. Chu and others have called this the “surveillance load,” which disproportionately falls on over-policed communities. Others too contend the system can’t be reformed by rewriting the code or refactoring algorithms to fix the metaphoric broken calculator; the data are intrinsically flawed.

There are no comprehensive datasets on how often the results of computational analyses misalign with a decision of guilt or innocence, or how often automated forms of suspicion turn up no evidence. Alleged serial killers have been caught because a discarded slice of pizza had their DNA on it; there have also been misguided investigations involving genotyping. The analysis of minute patterns on bullets and latent fingerprints increasingly leverages computational tools. There are other cases we just don’t know about. A conviction does not prove any emerging technique is methodologically sound science.

Even a best-case scenario, with published internal validation and publicly available benchmarks, leaves open another question. As Martschenko put it: “How many people ended up becoming a part of the process when at the end of the day, you know, it had nothing to do with them?” Mass surveillance almost certainly ensnares innocent people, so is the loss of privacy the price we must pay for whatever these systems accomplish? Some kind of systematic evaluation of the glitches and bugs might help. And that might ultimately answer, Martschenko said, the question of whether “the juice is worth the squeeze.”

When it comes to Sandoval, whether you believe an innocent man got sent to prison, or two algorithms wrongfully supported a hypothesis that suggested a guilty suspect did not touch evidence associated with a crime, the two stories do not align. There is only one truth. Something went wrong. The real problem is we may never know the scope of the miscarriages of justice that almost certainly exist in society.

Perlin maintains that science doesn’t take sides. His results were correct, and moreover, he said, there’s a flip side to not using computational tools to solve crimes. He argues that the government-sanctioned methods for DNA interpretation are uninformative. “A lot of the foundations of this field that are accepted are just based on sand.” Indeed, he said, results that falsely label DNA evidence as inconclusive mean that potentially useful information gets tossed. Sometimes this works against people trying to prove their innocence, but it also means the guilty go free. “The government makes mistakes by not getting the information that could have captured a serial killer or a child molester or somebody who’s harming people. They don’t want to revisit those either.”

In the clinical research community, federal agencies subject consumer products to testing. In medicine, we have the Food and Drug Administration and the Clinical Laboratory Improvement Amendments, which set national standards. We may know how and why so-called self-driving cars kill people because investigative reports examine the errors, and an agency is tasked with investigation, but how often are these other technologies messing up people’s lives?

The modern surveillance system is brittle, yet there is no single agency to provide oversight when it cracks. Indeed, if we only know when surveillance systems actually work — for instance, when the tech proves someone’s guilt beyond a reasonable doubt — a wide spectrum of scholars and activists argue that no one knows when something breaks, and so there’s no accountability. “After a plane crash, they’ll send in an expert team to try to document all aspects of what went wrong and how to fix it,” Thompson said. “No such body exists for miscarriages of justice.”

And what will happen if and when such technologies actually work? This question is particularly pressing if we cannot keep our data private. Municipalities sift through wastewater. Private firms record calls to collect the unique signatures of our voices and financial data; police run still images from surveillance videos through facial recognition algorithms. Beyond genetic analysis, geolocation, and biometrics, researchers raise the possibility of malicious use of brain-computer interfaces, or brain-jacking, looming on the horizon.

That too should concern all of us.

Originally published by Undark Magazine, 12.16.2024, republished with permission for educational, non-commercial purposes.