The history of AGI is a story of aspiration, innovation, and humbling complexity.

By Matthew A. McIntosh

Public Historian

Brewminate

Introduction

Artificial General Intelligence (AGI), often referred to as “strong AI,” is the theoretical form of artificial intelligence that possesses the ability to understand, learn, and apply knowledge across a wide range of tasks at a level equal to or surpassing human intelligence. Unlike narrow AI, which is designed to perform specific tasks (e.g., language translation, playing chess, or facial recognition), AGI implies a machine that can adapt to unfamiliar situations, reason abstractly, and exhibit autonomy in decision-making. While narrow AI has achieved remarkable practical success in recent decades, AGI remains largely an aspirational goal—one that challenges not only technological capabilities but also philosophical, ethical, and cognitive boundaries. This essay traces the history of AGI, from its conceptual roots in ancient philosophy to the modern challenges of its realization in computer science and beyond.

Philosophical and Mythological Antecedents

The desire to create artificial beings with intelligence comparable to humans predates the modern scientific era and finds its origins in the mythologies and philosophies of ancient civilizations. These early conceptions, though not scientific in nature, reveal a deep-seated fascination with the idea of intelligent constructs. In Greek mythology, Hephaestus, the god of fire and craftsmanship, forged automatons of gold to serve the gods on Mount Olympus. Most notable among these creations was Talos, a giant bronze figure who patrolled the island of Crete to protect it from invaders.1 Such stories reflect humanity’s early imaginative attempts to give form to non-human intelligence, often casting them as magical or divine entities. These narratives suggest an early belief that intelligence could be instantiated in non-biological forms, an idea that persists today in the theoretical frameworks surrounding artificial general intelligence (AGI).

Philosophical antecedents to AGI appear prominently in classical and early modern thought, particularly in the work of thinkers grappling with the nature of the mind and the possibility of mechanical reasoning. Plato, for instance, distinguished between the world of forms—immutable and perfect concepts—and the material world, suggesting that true understanding lies in the realm of the abstract.2 This dualism would later be echoed in the mechanistic philosophies of the Enlightenment. In the 17th century, René Descartes argued for a fundamental distinction between the res cogitans (thinking substance) and res extensa (extended substance), establishing the idea that the mind is a non-physical entity capable of reason independent of the body.3 This dualistic framework influenced later attempts to conceptualize intelligence as a function that might be abstracted from the physical brain, laying theoretical groundwork for imagining artificial intelligence.

During the same period, Gottfried Wilhelm Leibniz advanced a vision that was arguably more aligned with modern computational theory. He proposed the development of a “universal characteristic,” a symbolic language that could express all human thought and logical relations.4 His idea of a calculating machine capable of reasoning through symbols anticipated the later development of formal logic and computation. For Leibniz, reason was mechanical in essence—if thinking could be reduced to computation, then machines could, in theory, think. This intellectual leap was critical for future visions of AGI, which posit that mental faculties can be abstracted, formalized, and ultimately replicated in artificial substrates. Leibniz’s contributions suggest a proto-computational view of mind, long before Turing or digital computing emerged.

The Enlightenment also witnessed the proliferation of mechanical automata—ingeniously crafted machines that mimicked life and behavior. These creations, such as Jacques de Vaucanson’s Digesting Duck or the intricate humanoid automata by Pierre Jaquet-Droz, captivated audiences across Europe and reinforced the idea that life and intelligence might be replicated through engineering.5 While these automata did not think, they embodied a vision of the machine as an entity capable of lifelike functions, blurring the line between the organic and the mechanical. This mechanistic worldview supported the notion that, given the right principles and design, intelligence might one day emerge from matter—a theme central to AGI discussions. Such creations demonstrated that human ingenuity could simulate life, and by extension, might one day simulate the mind itself.

Religious and mystical traditions contributed to the antecedents of AGI by exploring the power of knowledge to animate and transform matter. In Jewish mysticism, the legend of the Golem recounts how rabbis, through secret knowledge and divine names, could bring clay figures to life to serve or protect their communities.6 The Golem, though a symbol of divine power, also represents the dangers of unchecked creation—an enduring theme in modern AGI debates. Similarly, alchemical and hermetic traditions posited that understanding the essence of life could grant control over nature itself, including the power to create artificial beings. These narratives underscore a recurring tension: the aspiration to build intelligence, and the fear that such power may escape human control. They presaged many of the ethical and philosophical concerns surrounding AGI today—particularly the need for wisdom and caution in the face of technological creation.

The Birth of Computation and the Roots of AI

The intellectual origins of artificial intelligence are inseparable from the birth of modern computation in the 19th and 20th centuries. Foundational to this development was the work of Charles Babbage and Ada Lovelace, who envisioned machines capable not just of arithmetic calculation but of symbolic reasoning. Babbage’s design for the Analytical Engine, though never fully realized, introduced the essential principles of programmability, memory, and control flow—concepts that would become central to modern computing.7 Ada Lovelace, often considered the first computer programmer, theorized that the Analytical Engine could manipulate symbols and compose music, prefiguring the idea that machines could engage in tasks requiring creativity and logic.8 Her vision challenged the notion that calculation was merely numerical, opening the door to the broader concept of mechanical intelligence. These early insights provided a philosophical and technical blueprint that would be revived in the 20th century, as theorists began to build real digital machines.

The conceptual revolution truly took shape with Alan Turing, whose 1936 paper “On Computable Numbers” introduced the abstract model now known as the Turing machine, together with a universal machine able to simulate any other such machine given its description. Turing argued that any procedure that can be carried out mechanically can, in principle, be performed by such a machine, a claim now known as the Church–Turing thesis, establishing a formal basis for digital computation.9 More significantly for AI, Turing proposed that the mind itself could be modeled as a computational system. In his seminal 1950 paper, “Computing Machinery and Intelligence,” Turing posed the provocative question, “Can machines think?” and introduced what came to be known as the Turing Test, a method for evaluating a machine’s capacity to exhibit intelligent behavior indistinguishable from that of a human.10 This work reframed intelligence as a process that might be implemented in non-biological systems, laying the philosophical groundwork for artificial general intelligence. Turing’s model combined the abstract rigor of mathematical logic with bold speculations about machine cognition, forming the intellectual bridge between computation and AI.
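Turing’s abstract machine is simple enough to simulate in a few lines. The sketch below is a minimal one-tape simulator; the rule-table format and the binary-increment example machine are illustrative assumptions rather than Turing’s own notation. A universal machine would go one step further, reading another machine’s rule table from its tape and interpreting it, which this sketch does not attempt.

```python
def run_turing_machine(rules, tape, state="start", head=0, blank="_", max_steps=10_000):
    """Simulate a one-tape Turing machine (illustrative sketch).

    rules maps (state, symbol) -> (new_state, symbol_to_write, move), move in {-1, +1}.
    The machine stops on entering the state "halt"; if max_steps runs out first,
    the tape is returned as it stands.
    """
    cells = dict(enumerate(tape))  # sparse tape: unspecified cells read as blank
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        state, write, move = rules[(state, symbol)]
        cells[head] = write
        head += move
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical example machine: add 1 to a binary number, head starting on its last digit.
INCREMENT = {
    ("start", "1"): ("start", "0", -1),  # 1 plus carry -> 0, keep carrying left
    ("start", "0"): ("halt", "1", -1),   # 0 plus carry -> 1, done
    ("start", "_"): ("halt", "1", -1),   # ran past the leftmost digit: new leading 1
}

if __name__ == "__main__":
    print(run_turing_machine(INCREMENT, "1011", head=3))  # 1011 + 1 = 1100
```

Everything the machine "knows" lives in the finite rule table; the unbounded tape supplies memory, which is what makes the model general enough to stand in for any mechanical procedure.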

Parallel to Turing’s theoretical contributions were developments in logic and formal systems, most notably in the work of Kurt Gödel, Alonzo Church, and others engaged in the foundational debates of mathematics. Church’s lambda calculus and Gödel’s incompleteness theorems demonstrated both the power and limitations of formal systems, while simultaneously showing that logic could encode operations resembling human thought.11 Claude Shannon’s work in information theory during the 1940s also proved crucial, as it mathematically formalized communication and symbol processing, reinforcing the idea that cognition could be reduced to information manipulation.12 These theories found application in early computing machines, such as the ENIAC and later the UNIVAC, which were designed for numerical tasks but increasingly used for symbolic processing. Together, these developments marked a shift from viewing machines as tools of arithmetic to potential agents of reasoning.

The formal establishment of artificial intelligence as a field occurred in 1956 at the Dartmouth Summer Research Project on Artificial Intelligence, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. The 1955 proposal for this meeting coined the term “artificial intelligence,” and the project set the agenda for decades of research: to construct machines capable of human-level learning, reasoning, and perception.13 McCarthy’s development of the LISP programming language soon afterward provided the AI community with a flexible tool for expressing symbolic logic and recursive operations, and LISP became the field’s dominant language for decades. Other landmark programs of the era, such as Newell and Simon’s General Problem Solver and Joseph Weizenbaum’s ELIZA, were written in other list-processing languages but shared the same symbolic orientation.14 These early efforts embraced a rule-based approach, assuming that intelligence could be emulated through the systematic application of formal logic. While promising in narrow domains, this “symbolic AI” would later be critiqued for its brittleness and inability to scale to real-world complexity, but at the time, it represented a major step in realizing the dream of thinking machines.

As the 20th century progressed, research in AI expanded rapidly, moving from isolated experiments to more ambitious systems. Advances in hardware and programming languages enabled the development of expert systems in the 1970s and 1980s, which attempted to encode the knowledge and heuristics of human specialists into digital frameworks. These systems, such as MYCIN in medical diagnostics, demonstrated that machines could outperform humans in constrained domains, reinforcing optimism about AI’s future.15 Meanwhile, alternative approaches—such as neural networks inspired by biological models—began to gain traction, although they initially faced technical limitations and theoretical skepticism. The AI community split into factions, with “connectionists” favoring learning through pattern recognition and “symbolic” researchers emphasizing logic-based representations. Despite early setbacks, these divergent threads would later converge in the modern era of deep learning and hybrid systems, rooted in the foundational insights of Babbage, Turing, and the pioneers of computation.

The Rise and Fall of Symbolic AI

The emergence of symbolic artificial intelligence (AI), also known as “Good Old-Fashioned AI” (GOFAI), in the mid-20th century marked a major shift in the pursuit of machine intelligence. Rooted in the assumption that human cognition could be understood and replicated through the manipulation of symbols according to formal logical rules, symbolic AI took inspiration from logic, linguistics, and cognitive psychology. Early pioneers like Allen Newell and Herbert A. Simon developed programs such as the Logic Theorist (1956) and the General Problem Solver (1957), which were designed to mimic human problem-solving by employing explicit rules and goal-directed reasoning.16 These systems were among the first to demonstrate that computers could solve abstract problems when provided with a defined symbolic framework. Their work laid the foundation for a generation of AI research predicated on the assumption that intelligence consisted of symbol processing, much as language is processed through grammar and syntax.

At the core of symbolic AI was the physical symbol system hypothesis, famously articulated by Newell and Simon in 1976. They proposed that a physical symbol system has the necessary and sufficient means for general intelligent action.17 This bold claim placed symbolic manipulation at the heart of cognitive modeling and fueled optimism that AI systems could eventually match or even surpass human reasoning capabilities. AI researchers constructed elaborate knowledge bases and rule-based systems, seeking to encode the semantics of the world into logical structures that a machine could navigate. The development of expert systems in the 1970s and 1980s—such as MYCIN for medical diagnosis and DENDRAL for chemical analysis—demonstrated the practical utility of symbolic reasoning in highly specialized domains.18 These systems used a combination of facts, rules, and inference engines to make decisions and were often able to perform at or above human expert levels in narrow tasks.
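The combination of facts, rules, and an inference engine can be illustrated with a minimal forward-chaining sketch in Python. The rules and fact names below are hypothetical toy examples, not MYCIN’s or DENDRAL’s actual knowledge bases; MYCIN, notably, reasoned largely by backward chaining from goals and attached certainty factors to its conclusions, both of which this sketch omits.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule maps a set of premise facts to a single conclusion.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule only when every premise is already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rules, loosely in the style of a diagnostic system.
RULES: List[Rule] = [
    (frozenset({"fever", "stiff_neck"}), "suspect_meningitis"),
    (frozenset({"suspect_meningitis", "gram_negative"}), "recommend_culture"),
    (frozenset({"cough"}), "suspect_respiratory_infection"),
]

if __name__ == "__main__":
    derived = forward_chain({"fever", "stiff_neck", "gram_negative"}, RULES)
    print(sorted(derived))
```

The brittleness discussed below is already visible in this toy: a case recorded as `high_temperature` instead of `fever` derives nothing, because the rules match symbols exactly and know nothing about synonyms.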

However, symbolic AI faced growing challenges as researchers attempted to extend its success beyond well-defined domains. The real world is messy, ambiguous, and often resistant to strict formalization, and symbolic systems struggled to scale accordingly. The process of encoding domain knowledge into rules was labor-intensive and brittle, often requiring thousands of handcrafted rules that failed to generalize across contexts.19 Moreover, these systems lacked the flexibility and adaptability associated with human cognition; they could not learn from new data or infer unstated assumptions as humans routinely do. The frame problem, first articulated by John McCarthy and Patrick Hayes in 1969, began as the difficulty of specifying which facts remain unchanged when an action is performed; in its broader form, it highlighted how hard it was for symbolic systems to discern which facts in a vast knowledge base were relevant to a particular reasoning task.20 These limitations exposed a fundamental disconnect between formal logic and the fluidity of real-world intelligence, sparking a crisis in AI research by the late 1980s.

The symbolic paradigm’s decline was further accelerated by the AI winters—periods of reduced funding and interest due to unmet expectations and disappointing results. The first AI winter in the mid-1970s was triggered by the failure of machine translation systems and general-purpose AI to live up to their ambitious promises.21 A second, more severe winter followed in the late 1980s, as expert systems proved fragile in commercial applications and difficult to maintain or update. Disillusionment set in as symbolic AI was increasingly seen as an overly rigid and limited approach to replicating human intelligence. Critics such as Hubert Dreyfus, a philosopher steeped in phenomenology, had long warned that intelligence could not be captured by symbol manipulation alone. In his influential critique What Computers Can’t Do, Dreyfus argued that AI needed to grapple with the embodied and contextual nature of human understanding.22 His ideas, once marginalized, began to gain traction as symbolic approaches faltered.

Despite its decline, symbolic AI left an indelible mark on the history of artificial intelligence. Many of its concepts—such as knowledge representation, inference, and planning—continue to influence AI research, particularly in hybrid systems that combine symbolic reasoning with machine learning. Furthermore, the symbolic legacy survives in areas such as natural language processing, formal verification, and automated theorem proving, where structured logic still plays a crucial role. In recent years, there has been a renewed interest in integrating symbolic methods with data-driven approaches to create more explainable and robust AI systems. The fall of pure symbolic AI was not the end of its story, but rather a transformation, as its insights are now being recontextualized in the era of deep learning and artificial general intelligence.23 Its rise and fall offer a cautionary tale about the limits of reductionism and the evolving complexity of modeling the human mind.

Connectionism and the Revival of Learning

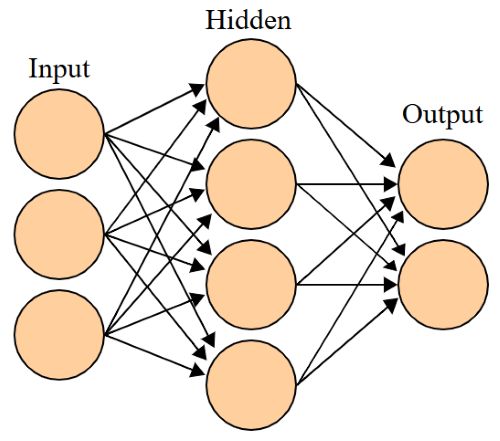

As symbolic artificial intelligence began to falter in the 1980s due to its inflexibility and limited capacity for learning, a long-dormant alternative approach to machine intelligence—connectionism—began to reemerge. Connectionism posits that cognitive processes arise from the interaction of numerous simple processing units, akin to neurons in the human brain. This idea was not new; it could be traced back to the 1943 work of Warren McCulloch and Walter Pitts, who modeled neural activity using Boolean logic to simulate computation in networks of artificial neurons.24 Later, Donald Hebb’s theory of synaptic plasticity in The Organization of Behavior (1949) laid a psychological foundation for learning in neural networks, proposing that connections between neurons strengthen with repeated activation—a principle now encapsulated in the phrase “cells that fire together wire together.”25 These early developments suggested that machines could learn adaptively from data, rather than relying solely on explicit rules, but technical limitations and lack of computational resources delayed the practical realization of these ideas.
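Both ideas fit in a few lines of Python. The sketch below uses a simplified threshold unit in the spirit of McCulloch and Pitts (their original units also had absolute inhibitory inputs, omitted here) and the product rule Δw_i = η·x_i·y, a common later formalization of Hebb’s qualitative principle; the learning rate η = 0.1 is an arbitrary assumption. The fixed unit computes but does not learn; the Hebbian update is what adds learning.

```python
from typing import List


def mcculloch_pitts(inputs: List[int], weights: List[float], threshold: float) -> int:
    """Binary threshold unit: fire (1) if the weighted input sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def hebbian_update(weights: List[float], inputs: List[int], output: int,
                   lr: float = 0.1) -> List[float]:
    """Plain Hebbian rule: strengthen each weight in proportion to x_i * y."""
    return [w + lr * x * output for w, x in zip(weights, inputs)]


if __name__ == "__main__":
    # With unit weights, threshold 2 yields logical AND and threshold 1 yields OR.
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, mcculloch_pitts([a, b], [1, 1], 2), mcculloch_pitts([a, b], [1, 1], 1))
    # Only the input that was active together with the output gets a stronger weight.
    print(hebbian_update([0.5, 0.5], [1, 0], output=1))
```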

The resurgence of connectionist models in the 1980s was fueled by the rediscovery and popularization of backpropagation, an algorithm that allowed multilayer neural networks to adjust internal weights through gradient descent. David Rumelhart, Geoffrey Hinton, and Ronald Williams played a key role in reviving interest in neural networks by demonstrating that backpropagation could train networks to recognize complex patterns and perform nonlinear classifications.26 This capability contrasted sharply with the rigid rule structures of symbolic AI, offering a flexible and adaptive means of learning from experience. Their 1986 publication in Nature marked a watershed moment, re-establishing connectionism as a serious contender in cognitive science and AI. The promise of neural networks lay in their potential to generalize from noisy or incomplete data—much as human cognition often does—offering a form of intelligence not explicitly programmed but emergent from interaction with the environment.
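Backpropagation is the chain rule applied layer by layer: compute the output error, then pass it backward through the weights to obtain every parameter’s gradient, and take a gradient-descent step. The pure-Python sketch below assumes a toy 2-2-1 sigmoid network, squared-error loss, random initialization, and a learning rate of 0.5; none of these are details of Rumelhart, Hinton, and Williams’s experiments. Whether such a tiny network fully solves XOR depends on its random start, since small networks can stall in poor local minima.

```python
import math
import random

# Hypothetical toy network: 2 inputs -> 2 sigmoid hidden units -> 1 sigmoid output.
# params = (W1, b1, W2, b2): W1 is 2x2, b1 and W2 have length 2, b2 is a float.


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    W1, b1, W2, b2 = params
    h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(2)]
    y = sigmoid(W2[0] * h[0] + W2[1] * h[1] + b2)
    return h, y


def loss_and_grads(params, x, target):
    """Loss 0.5 * (y - target)**2 and its gradient for every parameter."""
    W1, b1, W2, b2 = params
    h, y = forward(params, x)
    loss = 0.5 * (y - target) ** 2
    # Output error signal dL/dz_out, using sigmoid'(z) = y * (1 - y).
    d_out = (y - target) * y * (1 - y)
    gW2 = [d_out * h[j] for j in range(2)]
    gb2 = d_out
    # Backward pass: send the error through W2, then through each hidden sigmoid.
    d_hid = [d_out * W2[j] * h[j] * (1 - h[j]) for j in range(2)]
    gW1 = [[d_hid[j] * x[i] for i in range(2)] for j in range(2)]
    gb1 = list(d_hid)
    return loss, (gW1, gb1, gW2, gb2)


def sgd_step(params, grads, lr):
    """Gradient descent: move every parameter a small step against its gradient."""
    (W1, b1, W2, b2), (gW1, gb1, gW2, gb2) = params, grads
    return (
        [[W1[j][i] - lr * gW1[j][i] for i in range(2)] for j in range(2)],
        [b1[j] - lr * gb1[j] for j in range(2)],
        [W2[j] - lr * gW2[j] for j in range(2)],
        b2 - lr * gb2,
    )


def init_params(seed=0):
    rng = random.Random(seed)

    def u():
        return rng.uniform(-1.0, 1.0)

    return ([[u(), u()], [u(), u()]], [u(), u()], [u(), u()], u())


XOR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]


def total_loss(params, data):
    return sum(loss_and_grads(params, x, t)[0] for x, t in data)


if __name__ == "__main__":
    params = init_params()
    print("loss before:", total_loss(params, XOR_DATA))
    for _ in range(5000):
        for x, t in XOR_DATA:
            params = sgd_step(params, loss_and_grads(params, x, t)[1], lr=0.5)
    print("loss after:", total_loss(params, XOR_DATA))
```

A standard way to trust such code is a gradient check: nudge one weight by a tiny amount, measure the change in loss numerically, and confirm it matches the backpropagated gradient.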

Despite this promise, early neural networks faced skepticism and practical limitations. Critics pointed to their “black box” nature, lack of interpretability, and difficulties in scaling to deeper architectures. Furthermore, theoretical limitations, such as those highlighted by Marvin Minsky and Seymour Papert in Perceptrons (1969), lingered in the academic consciousness.27 Minsky and Papert had shown that single-layer perceptrons cannot compute functions that are not linearly separable, a class that includes a function as simple as XOR (output 1 when exactly one of two inputs is 1), and their analysis led many to dismiss neural networks altogether for over a decade. While backpropagation overcame this particular limit by making it possible to train networks with hidden layers, neural networks remained relatively shallow and constrained by computational resources throughout the 1990s. Nevertheless, the conceptual shift toward learning systems marked a growing recognition that intelligent behavior required not just logic, but adaptation, an idea that would profoundly shape the future of AI.
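Minsky and Papert’s XOR result can be checked directly. The sketch below runs the perceptron learning rule on AND, which is linearly separable and so converges, and on XOR, where no training pass can ever finish error-free because no single line separates the two classes. A two-layer threshold network then computes XOR by combining an OR unit and an AND unit in a hidden layer; its weights are hand-chosen for illustration, not learned, and learning such hidden weights is precisely the job backpropagation took on.

```python
def step(z):
    """Threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def train_perceptron(data, epochs=100, lr=1.0):
    """Perceptron learning rule on 2-input Boolean data.

    Returns (weights, bias, errors made during the last epoch run).
    """
    w, b = [0.0, 0.0], 0.0
    errors = 0
    for _ in range(epochs):
        errors = 0
        for x, target in data:
            err = target - step(w[0] * x[0] + w[1] * x[1] + b)
            if err != 0:
                errors += 1
                # Shift the decision line toward the misclassified example.
                w = [w[0] + lr * err * x[0], w[1] + lr * err * x[1]]
                b += lr * err
        if errors == 0:
            break  # a full error-free pass: the classes are linearly separated
    return w, b, errors


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def xor_two_layer(a, b):
    """Hand-set two-layer threshold network: XOR = OR(a, b) and not AND(a, b)."""
    h_or = step(a + b - 0.5)
    h_and = step(a + b - 1.5)
    return step(h_or - h_and - 0.5)


if __name__ == "__main__":
    print("AND errors in final epoch:", train_perceptron(AND)[2])
    print("XOR errors in final epoch:", train_perceptron(XOR)[2])
    print([xor_two_layer(a, b) for a in (0, 1) for b in (0, 1)])
```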

Connectionism also found applications in cognitive modeling and psychology, where it offered alternatives to rule-based theories of the mind. Researchers such as James McClelland and David Rumelhart developed the Parallel Distributed Processing (PDP) framework, arguing that cognition arises from the interaction of distributed patterns of activation across networks.28 This view aligned with neuroscientific discoveries about brain function and provided models for phenomena such as memory, language acquisition, and perception. Connectionist models challenged the dominant computational theory of mind by emphasizing emergence over explicit representation. Although the debate between symbolic and connectionist paradigms persisted, a growing number of scholars saw potential in integrating both approaches. Hybrid systems began to appear, combining the rule-based precision of symbolic logic with the adaptive learning of neural networks—a precursor to the integrated architectures of modern AI.

By the late 2000s and early 2010s, advances in hardware, especially the use of GPUs for training, and the availability of large datasets ushered in a new era of deep learning, allowing for the construction of much deeper and more capable neural networks. The revival of learning-based systems gained lasting momentum, culminating in breakthroughs in image recognition, natural language processing, and game playing. Neural networks evolved from theoretical curiosities to practical tools, underpinning services from facial recognition to autonomous vehicles. The success of deep learning models such as convolutional and recurrent neural networks demonstrated the scalability and power of connectionist approaches, vindicating decades of work by pioneers in the field. While debates over interpretability and generalization continue, the revival of learning through connectionism has decisively reshaped the field of AI, laying a foundation for ongoing efforts to achieve artificial general intelligence.29

The Emergence of AGI as a Distinct Subfield

By the early 2000s, as artificial intelligence (AI) matured into a multifaceted discipline encompassing everything from expert systems to statistical machine learning, a growing number of researchers began to distinguish between narrow AI and the pursuit of artificial general intelligence (AGI), a system capable of performing any intellectual task that a human can. While the broader AI community was preoccupied with specialized applications, a smaller group of thinkers revived the foundational dream of building machines with human-level cognitive flexibility. This vision, once assumed to be the overarching goal of AI, had been sidelined during the pragmatic shift toward narrowly defined tasks. However, the limitations of narrow AI, including its brittleness, lack of common-sense reasoning, and inability to transfer knowledge across domains, motivated researchers to revisit the question of how to build a general-purpose mind.30 The new AGI subfield sought to explicitly address these challenges and provide unified frameworks for intelligence.

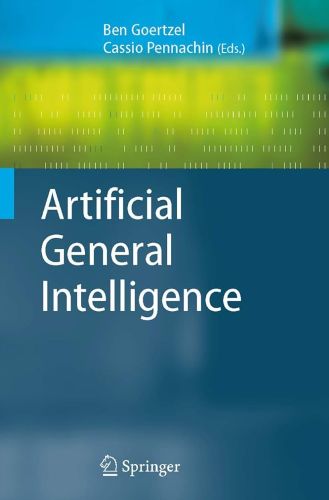

A landmark in this resurgence was the creation of dedicated venues under the name “Artificial General Intelligence,” a term popularized in the early 2000s by Ben Goertzel and colleagues to mark a distinct research trajectory. These efforts led to the first Conference on Artificial General Intelligence, held at the University of Memphis in 2008, which brought together interdisciplinary scholars, including computer scientists, cognitive scientists, and philosophers, who saw the need to differentiate their work from the prevailing narrow AI paradigm.31 Goertzel and Cassio Pennachin’s edited volume Artificial General Intelligence (2007) was one of the first to define the theoretical scope and technical challenges of the field.32 The emergence of AGI as a term and concept allowed researchers to regroup under a shared identity and explore models that focused on transfer learning, self-reflection, memory integration, and goal-directed behavior. This framing helped AGI gain traction as an intellectual movement despite initial skepticism from the mainstream AI community.

AGI research drew from a broad range of inspirations, including cognitive architectures, developmental psychology, and formal models of intelligence. Architectures like SOAR, ACT-R, and Goertzel’s own OpenCog aimed to emulate the integrated nature of human cognition by combining modules for perception, action, reasoning, and memory.33 Similarly, Marcus Hutter’s AIXI model proposed a formal mathematical theory of general intelligence based on algorithmic probability and decision theory, offering a rigorous benchmark—albeit an uncomputable one—for assessing AGI systems.34 While such models varied in scope and feasibility, they shared a commitment to understanding intelligence as a unified phenomenon rather than a collection of isolated skills. This theoretical ambition stood in stark contrast to the fragmented successes of narrow AI, and although AGI research remained marginal for much of the 2000s, it laid important conceptual groundwork for future advances.

The deep learning revolution of the 2010s unexpectedly breathed new life into AGI discourse. While initially framed as tools for narrow tasks, large-scale neural networks such as transformers began to exhibit emergent capabilities that crossed domain boundaries. Models like OpenAI’s GPT series, Google’s BERT, and DeepMind’s AlphaZero displayed generalization, language understanding, and problem-solving abilities once thought out of reach for narrow AI.35 These developments prompted a renewed debate about whether the long-term goal of AGI was finally within reach. While many researchers remained cautious, others argued that scaling current methods might lead, if not to true general intelligence, then to systems functionally equivalent to it. The release of GPT-3 in 2020 and GPT-4 in 2023 marked turning points in public and academic awareness of AGI as a credible near-term objective.

Today, AGI is increasingly recognized as a distinct and urgent domain within AI research, attracting both enthusiasm and concern. Institutions like OpenAI, DeepMind, and Anthropic have made AGI development their explicit mission, while governments and ethics boards grapple with the potential risks of human-level machine intelligence. The field now intersects with pressing questions about safety, alignment, interpretability, and the social impact of transformative technologies.36 Researchers are exploring ways to ensure that AGI systems behave ethically and reliably, even in unforeseen contexts. The very notion of general intelligence—once considered speculative or philosophical—is now an active site of empirical, computational, and ethical inquiry. As AGI continues to evolve from a fringe aspiration to a serious research program, it reflects the enduring human desire to understand and replicate the mind itself.

Deep Learning and Modern Approaches to AGI since 2010

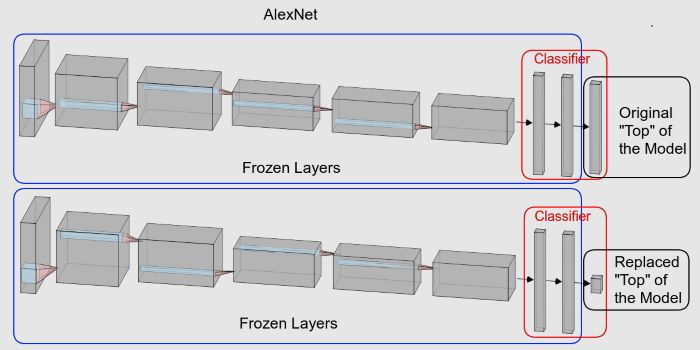

The 2010s marked a profound transformation in artificial intelligence with the resurgence and expansion of deep learning, a class of algorithms rooted in multi-layered artificial neural networks. Though neural network research dates back decades, breakthroughs in computational power (especially GPUs), access to massive datasets, and improved algorithms led to a dramatic leap in performance. The pivotal moment came in 2012, when Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton used a deep convolutional neural network, AlexNet, to achieve unprecedented accuracy in the ImageNet competition, a benchmark for object recognition.37 This victory catalyzed industry-wide adoption of deep learning and renewed interest in older sequence architectures such as recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, which were eventually overtaken by transformer-based models. The focus shifted from hand-engineering features to enabling models to learn features directly from raw data, establishing a new paradigm in machine learning and setting the stage for serious progress toward artificial general intelligence (AGI).

Deep learning’s success extended beyond image recognition. Recurrent and attention-based networks revolutionized natural language processing (NLP), culminating in the development of the transformer architecture introduced by Vaswani et al. in 2017.38 By replacing recurrence with self-attention, transformers can process entire sequences in parallel during training and learn contextual relationships more effectively than their predecessors, advantages that proved especially powerful as models were scaled up. These models formed the foundation of OpenAI’s GPT series, Google’s BERT and T5, and Meta’s LLaMA, all of which demonstrated remarkable abilities in text generation, translation, summarization, and reasoning. The development of large language models (LLMs) such as GPT-3 and GPT-4 further blurred the line between narrow and general intelligence, showcasing emergent behaviors such as in-context learning (performing a new task from a few examples supplied in the prompt, known as few-shot prompting) and generalization across tasks.39 While these models were still brittle and dependent on vast data corpora, they introduced new pathways to AGI by exhibiting capabilities once reserved for human cognition.
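The core operation inside a transformer, scaled dot-product attention, computes softmax(QKᵀ/√d_k)·V: each query is scored against every key, the scores become weights, and the output is a weighted average of the value vectors. The pure-Python sketch below shows a single attention head only; the learned projections that produce Q, K, and V, multiple heads, and the causal mask used in GPT-style models are all omitted, and the small input matrices are made up for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output row is a weighted average of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


if __name__ == "__main__":
    K = [[1.0, 0.0], [0.0, 1.0]]
    V = [[10.0, 0.0], [0.0, 10.0]]
    # A query aligned with the first key attends mostly to the first value.
    print(attention([[5.0, 0.0]], K, V))
```

Each query row is computed independently of the others, which is why the whole sequence can be processed in parallel; production implementations express the same loop as batched matrix multiplications.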

One of the key modern approaches to AGI rests on the scaling hypothesis, which posits that larger models trained with more data and compute can continue to improve in performance, approaching or achieving general intelligence as a function of scale. This hypothesis, supported by the performance gains seen from GPT-2 to GPT-4, suggests that intelligence may be an emergent property of sufficient model complexity rather than the result of specific architectural innovations.40 Researchers at OpenAI and Anthropic, among others, have documented empirical scaling laws in which a model’s test loss falls predictably as parameters, data, and compute grow, and some have reported that larger models acquire qualitatively new capabilities, including reasoning, coding, and common-sense inference, although whether such abilities are genuinely “emergent” remains contested. These observations have also raised the question of whether current deep learning approaches will “scale to AGI” or whether new principles will be needed. Nevertheless, scaling laws and empirical performance have dominated the research agenda, guiding much of the recent innovation and investment in the field.
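Scaling laws of the kind mentioned here are usually stated as power laws. As a sketch of that form, assume L is test loss, N is the number of model parameters, and N_c and α_N are constants fitted to experiments (their values vary by study and are not assumed here):

```latex
L(N) \approx \left( \frac{N_c}{N} \right)^{\alpha_N}
\quad\Longrightarrow\quad
\frac{L(2N)}{L(N)} = \left( \frac{1}{2} \right)^{\alpha_N} = 2^{-\alpha_N}
```

For an illustrative exponent α_N = 0.1 (an assumed value, not a reported one), each doubling of parameters multiplies the loss by 2^{-0.1} ≈ 0.93, a steady but diminishing absolute improvement. Analogous power laws are fitted for dataset size and compute.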

Beyond scaling, researchers are exploring multimodal models that integrate different types of data (text, images, audio, and even video) into a single framework. DeepMind’s Gato, OpenAI’s CLIP and DALL·E, and Google DeepMind’s Gemini all represent efforts to build more unified models capable of interacting with the world in complex, flexible ways.41 These systems loosely parallel the human ability to synthesize information from multiple sensory modalities, which may support more general reasoning and creative problem-solving. Additionally, reinforcement learning from human feedback (RLHF) and preference modeling have introduced ways to align AI behavior more closely with human values and intentions, partially addressing early concerns about control and misuse. By training agents not only to perform tasks but to do so in accordance with human preferences, modern AGI research now embraces both technical advancement and safety as complementary goals.
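Preference modeling, as commonly described for RLHF, first trains a reward model on pairs of responses in which a human marked one as better, typically with a Bradley–Terry style loss, −log σ(r_chosen − r_rejected); a separate reinforcement-learning step (not shown) then optimizes the AI system against that learned reward. The sketch below assumes a hypothetical linear reward over made-up feature vectors; real reward models are large neural networks that score full text.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)), computed stably.

    Small when the human-preferred response receives the higher reward.
    """
    margin = reward_chosen - reward_rejected
    # log(1 + exp(-m)), rewritten for negative m so exp() never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def train_linear_reward(pairs, dim, lr=0.5, epochs=200):
    """Fit a hypothetical linear reward r(x) = w . x on (chosen, rejected) feature pairs."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            diff = [c - r for c, r in zip(chosen, rejected)]
            margin = sum(wi * d for wi, d in zip(w, diff))
            # d(loss)/d(margin) = -sigmoid(-margin), so descending the loss adds this term.
            coeff = 1.0 / (1.0 + math.exp(margin))
            w = [wi + lr * coeff * d for wi, d in zip(w, diff)]
    return w


if __name__ == "__main__":
    # Made-up features: humans preferred the response with more of feature 0.
    pairs = [([1.0, 0.0], [0.0, 1.0])]
    w = train_linear_reward(pairs, dim=2)
    print(w, preference_loss(w[0], w[1]))
```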

Despite these advances, modern deep learning approaches to AGI face ongoing challenges. These include issues of interpretability, robustness, and alignment, as well as concerns over bias, hallucination, and overfitting. Critics argue that without a principled understanding of intelligence and cognition, scaling may reach diminishing returns or fail catastrophically in real-world environments. Others believe that the current wave of LLMs and multimodal models may form the substrate of AGI, particularly if paired with memory systems, meta-learning, and agentic planning frameworks.42 The growing convergence of machine learning, cognitive science, neuroscience, and philosophy reflects a maturing of AGI as a serious interdisciplinary endeavor. While the exact timeline and nature of AGI remain uncertain, deep learning has undoubtedly redefined the frontier of possibility, bringing the dream of general machine intelligence closer to reality than ever before.

The Current State and Future Prospects of Artificial General Intelligence

The present landscape of artificial general intelligence (AGI) research is characterized by a blend of optimism, skepticism, and growing institutional investment. Major technology companies, including OpenAI, DeepMind, Anthropic, and Meta, have declared AGI development an explicit goal, signaling a profound shift in priorities from task-specific artificial intelligence to general-purpose cognitive systems. The unveiling of highly capable large language models (LLMs) such as OpenAI’s GPT-4 and Google DeepMind’s Gemini has led many to believe that AGI is no longer a distant aspiration but a plausible near- or medium-term reality.43 These models, trained on massive corpora and refined through techniques like reinforcement learning from human feedback (RLHF), exhibit abilities in language comprehension, reasoning, translation, summarization, and even basic programming. Their emergence has sparked renewed debates over what constitutes AGI, and whether the boundary between “narrow” and “general” is dissolving as model capabilities increase.

Despite recent progress, there is still no consensus on the definition of AGI, nor a standardized metric for determining when it has been achieved. Some researchers adopt a functionalist criterion, arguing that any system capable of performing a wide range of tasks at human-level competence qualifies, while others insist on more stringent criteria such as self-awareness, theory of mind, or physical embodiment.44 This ambiguity complicates both theoretical work and policy planning. Nevertheless, the continued development of multimodal agents and tool-using systems capable of integrating diverse forms of knowledge suggests that AI systems are approaching a threshold at which meaningful comparisons to human intelligence can be made. In parallel, advances in memory augmentation, context persistence, and meta-learning indicate that current models are inching toward a more unified and flexible intelligence architecture.45

The future of AGI research also hinges on the alignment problem: ensuring that advanced AI systems act in accordance with human values and intentions. This has become a central concern within the field, prompting initiatives focused on safety, interpretability, and oversight. Organizations such as the Alignment Research Center and the Center for AI Safety, along with research teams within OpenAI and DeepMind, are exploring how to make future AGI systems corrigible, transparent, and verifiably beneficial.46 Proposed solutions range from scalable oversight methods to constitutional AI frameworks and value learning algorithms. However, many of these approaches remain experimental, and the problem of aligning systems whose capabilities exceed our own is still unsolved. Alignment is therefore increasingly regarded as both a technical and a philosophical frontier, one that may determine whether AGI leads to unprecedented progress or catastrophic failure.

The geopolitical implications of AGI are equally profound. As nations recognize the transformative potential of AGI for economic, military, and strategic advantage, international competition in AI capabilities has intensified. China, the United States, and the European Union are investing heavily in foundation models, compute infrastructure, and AI talent pipelines.47 This race has prompted calls for an international regulatory body, akin to the International Atomic Energy Agency, to monitor and control the development of potentially dangerous AGI systems. Concurrently, academic and civil society voices call for global cooperation and democratic governance of AGI, advocating frameworks that promote safety, fairness, and inclusivity. The current absence of comprehensive regulation has produced a patchwork of policy initiatives, but consensus is forming around the need for anticipatory governance, particularly as models approach general competence.

Looking forward, the future of AGI remains both promising and uncertain. Some researchers anticipate the emergence of AGI within the next decade, while others caution that significant theoretical and engineering breakthroughs are still required.48 Whether AGI will resemble current LLM architectures scaled further or emerge from entirely novel paradigms—such as neurosymbolic systems, quantum computing, or brain-machine interfaces—remains an open question. Regardless of the path, the pursuit of AGI is no longer confined to speculative thought but constitutes an urgent and active domain of scientific inquiry. It invites participation from across disciplines—neuroscience, philosophy, ethics, computer science, and public policy—and compels society to prepare for the unprecedented consequences of building minds that may rival or surpass our own.

Conclusion

The history of AGI is a story of aspiration, innovation, and humbling complexity. From mythical automata and philosophical thought experiments to modern neural networks and global research initiatives, the pursuit of AGI reflects humanity’s deepest questions about intelligence, consciousness, and the essence of being. While we have made enormous strides in simulating aspects of intelligence, the holy grail of AGI, a system that truly thinks, understands, and reasons as we do, remains out of reach. Whether AGI will be achieved in decades or centuries, or whether it is possible at all, is still unknown. But the journey toward it continues to reshape our understanding of minds, machines, and ourselves.

Appendix

Endnotes

- Jan Bremmer, The Early Greek Concept of the Soul (Princeton: Princeton University Press, 1983), 122–25.

- Plato, Phaedrus, trans. Robin Waterfield (Oxford: Oxford University Press, 2002), 245c–249d.

- René Descartes, Meditations on First Philosophy, trans. John Cottingham (Cambridge: Cambridge University Press, 1996), Meditation II.

- Gottfried Wilhelm Leibniz, Philosophical Essays, ed. and trans. Roger Ariew and Daniel Garber (Indianapolis: Hackett Publishing Company, 1989), 5–8.

- Jessica Riskin, The Restless Clock: A History of the Centuries-Long Argument over What Makes Living Things Tick (Chicago: University of Chicago Press, 2016), 89–93.

- Moshe Idel, Golem: Jewish Magical and Mystical Traditions on the Artificial Anthropoid (Albany: SUNY Press, 1990), 34–37.

- Doron Swade, The Difference Engine: Charles Babbage and the Quest to Build the First Computer (New York: Viking, 2000), 101–105.

- Betty Alexandra Toole, Ada, the Enchantress of Numbers: Prophet of the Computer Age (Mill Valley: Strawberry Press, 1992), 224–226.

- Alan Turing, “On Computable Numbers, with an Application to the Entscheidungsproblem,” Proceedings of the London Mathematical Society, 2nd ser., 42 (1936): 230–265.

- Alan Turing, “Computing Machinery and Intelligence,” Mind 59, no. 236 (1950): 433–460.

- Martin Davis, Engines of Logic: Mathematicians and the Origin of the Computer (New York: W.W. Norton, 2000), 112–118.

- Claude Shannon, “A Mathematical Theory of Communication,” Bell System Technical Journal 27, no. 3 (1948): 379–423.

- Nils J. Nilsson, The Quest for Artificial Intelligence: A History of Ideas and Achievements (Cambridge: Cambridge University Press, 2010), 63–67.

- John McCarthy, “Recursive Functions of Symbolic Expressions and Their Computation by Machine, Part I,” Communications of the ACM 3, no. 4 (1960): 184–195.

- Edward A. Feigenbaum and Pamela McCorduck, The Fifth Generation: Artificial Intelligence and Japan’s Computer Challenge to the World (Reading, MA: Addison-Wesley, 1983), 75–79.

- Allen Newell and Herbert A. Simon, “The Logic Theory Machine: A Complex Information Processing System,” IRE Transactions on Information Theory 2, no. 3 (1956): 61–79.

- Allen Newell and Herbert A. Simon, Human Problem Solving (Englewood Cliffs, NJ: Prentice-Hall, 1972), 4.

- Bruce G. Buchanan and Edward H. Shortliffe, Rule-Based Expert Systems: The MYCIN Experiments of the Stanford Heuristic Programming Project (Reading, MA: Addison-Wesley, 1984), 15–28.

- Nils J. Nilsson, Artificial Intelligence: A New Synthesis (San Francisco: Morgan Kaufmann, 1998), 89–91.

- John McCarthy and Patrick J. Hayes, “Some Philosophical Problems from the Standpoint of Artificial Intelligence,” in Machine Intelligence 4, ed. Bernard Meltzer and Donald Michie (Edinburgh: Edinburgh University Press, 1969), 463–502.

- Pamela McCorduck, Machines Who Think: A Personal Inquiry into the History and Prospects of Artificial Intelligence, 2nd ed. (Natick, MA: A.K. Peters, 2004), 329–334.

- Hubert L. Dreyfus, What Computers Can’t Do: A Critique of Artificial Reason (New York: Harper & Row, 1972), 147–149.

- Gary Marcus and Ernest Davis, Rebooting AI: Building Artificial Intelligence We Can Trust (New York: Pantheon Books, 2019), 53–58.

- Warren S. McCulloch and Walter Pitts, “A Logical Calculus of the Ideas Immanent in Nervous Activity,” The Bulletin of Mathematical Biophysics 5, no. 4 (1943): 115–133.

- Donald O. Hebb, The Organization of Behavior: A Neuropsychological Theory (New York: Wiley, 1949), 62–78.

- David E. Rumelhart, Geoffrey E. Hinton, and Ronald J. Williams, “Learning Representations by Back-Propagating Errors,” Nature 323, no. 6088 (1986): 533–536.

- Marvin Minsky and Seymour Papert, Perceptrons: An Introduction to Computational Geometry (Cambridge, MA: MIT Press, 1969), 88–97.

- James L. McClelland and David E. Rumelhart, eds., Parallel Distributed Processing: Explorations in the Microstructure of Cognition, vol. 1 (Cambridge, MA: MIT Press, 1986), 3–24.

- Yann LeCun, Yoshua Bengio, and Geoffrey Hinton, “Deep Learning,” Nature 521, no. 7553 (2015): 436–444.

- Shane Legg and Marcus Hutter, “A Collection of Definitions of Intelligence,” Frontiers in Artificial Intelligence and Applications 157 (2007): 17–24.

- Ben Goertzel and Cassio Pennachin, “The AI in AGI: Narrow vs. General Intelligence,” in Artificial General Intelligence: Proceedings of the 2005 AGI Workshop, ed. Ben Goertzel and Cassio Pennachin (Memphis: Cognitive Technologies, 2005), 1–10.

- Ben Goertzel and Cassio Pennachin, eds., Artificial General Intelligence (Berlin: Springer, 2007).

- John E. Laird, The Soar Cognitive Architecture (Cambridge, MA: MIT Press, 2012); Ben Goertzel et al., “The Architecture of OpenCog,” in Biologically Inspired Cognitive Architectures, ed. Alexei Samsonovich and Ben Goertzel (Amsterdam: IOS Press, 2010), 170–175.

- Marcus Hutter, Universal Artificial Intelligence: Sequential Decisions Based on Algorithmic Probability (Berlin: Springer, 2005).

- Tom B. Brown et al., “Language Models are Few-Shot Learners,” Advances in Neural Information Processing Systems 33 (2020): 1877–1901; David Silver et al., “Mastering the Game of Go with Deep Neural Networks and Tree Search,” Nature 529, no. 7587 (2016): 484–489.

- Jan Leike et al., “Scalable Agent Alignment via Reward Modeling: A Research Agenda,” arXiv preprint arXiv:1811.07871 (2018).

- Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton, “ImageNet Classification with Deep Convolutional Neural Networks,” Communications of the ACM 60, no. 6 (2017): 84–90.

- Ashish Vaswani et al., “Attention Is All You Need,” Advances in Neural Information Processing Systems 30 (2017): 5998–6008.

- Tom B. Brown et al., “Language Models are Few-Shot Learners,” 1877–1901.

- Jared Kaplan et al., “Scaling Laws for Neural Language Models,” arXiv preprint arXiv:2001.08361 (2020).

- Scott Reed et al., “A Generalist Agent,” arXiv preprint arXiv:2205.06175 (2022); Alec Radford et al., “Learning Transferable Visual Models from Natural Language Supervision,” International Conference on Machine Learning (2021); OpenAI, “Introducing GPT-4,” OpenAI Blog, March 14, 2023.

- Jan Leike et al., “Superhuman AI for Multiplayer Online Battle Arena Games,” Science 364, no. 6443 (2019): 885–890.

- OpenAI, “Introducing GPT-4,” OpenAI Blog, March 14, 2023, https://openai.com/research/gpt-4.

- Shane Legg and Marcus Hutter, “A Collection of Definitions of Intelligence,” 17–24.

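- Jacob Andreas, “Language Models as Agent Models,” in Findings of the Association for Computational Linguistics: EMNLP 2022 (Abu Dhabi: Association for Computational Linguistics, 2022).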

- Paul Christiano et al., “Deep Reinforcement Learning from Human Preferences,” Advances in Neural Information Processing Systems 30 (2017): 4299–4307; Jan Leike et al., “Scalable Agent Alignment via Reward Modeling,” arXiv preprint arXiv:1811.07871 (2018).

- Elsa B. Kania, “AI Weapons and China’s Military Innovation,” Brookings Institution Report, April 2020; Andrea Renda, “Artificial Intelligence: Ethics, Governance and Policy Challenges,” European Parliament Research Service, June 2019.

- Gary Marcus, “Deep Learning Is Hitting a Wall,” Nautilus, March 10, 2022.

Bibliography

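- Andreas, Jacob. “Language Models as Agent Models.” In Findings of the Association for Computational Linguistics: EMNLP 2022. Abu Dhabi: Association for Computational Linguistics, 2022.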

- Bremmer, Jan. The Early Greek Concept of the Soul. Princeton: Princeton University Press, 1983.

- Brown, Tom B., et al. “Language Models are Few-Shot Learners.” Advances in Neural Information Processing Systems 33 (2020): 1877–1901.

- Buchanan, Bruce G., and Edward H. Shortliffe. Rule-Based Expert Systems: The MYCIN Experiments of the Stanford Heuristic Programming Project. Reading, MA: Addison-Wesley, 1984.

- Christiano, Paul, et al. “Deep Reinforcement Learning from Human Preferences.” Advances in Neural Information Processing Systems 30 (2017): 4299–4307.

- Davis, Martin. Engines of Logic: Mathematicians and the Origin of the Computer. New York: W.W. Norton, 2000.

- Descartes, René. Meditations on First Philosophy. Translated by John Cottingham. Cambridge: Cambridge University Press, 1996.

- Dreyfus, Hubert L. What Computers Can’t Do: A Critique of Artificial Reason. New York: Harper & Row, 1972.

- Feigenbaum, Edward A., and Pamela McCorduck. The Fifth Generation: Artificial Intelligence and Japan’s Computer Challenge to the World. Reading, MA: Addison-Wesley, 1983.

- Goertzel, Ben, and Cassio Pennachin, eds. Artificial General Intelligence. Berlin: Springer, 2007.

- Goertzel, Ben, and Cassio Pennachin. “The AI in AGI: Narrow vs. General Intelligence.” In Artificial General Intelligence: Proceedings of the 2005 AGI Workshop, 1–10. Memphis: Cognitive Technologies, 2005.

- Goertzel, Ben, et al. “The Architecture of OpenCog.” In Biologically Inspired Cognitive Architectures, edited by Alexei Samsonovich and Ben Goertzel, 170–175. Amsterdam: IOS Press, 2010.

- Silver, David, et al. “Mastering the Game of Go with Deep Neural Networks and Tree Search.” Nature 529, no. 7587 (2016): 484–489.

- Hebb, Donald O. The Organization of Behavior: A Neuropsychological Theory. New York: Wiley, 1949.

- Hutter, Marcus. Universal Artificial Intelligence: Sequential Decisions Based on Algorithmic Probability. Berlin: Springer, 2005.

- Idel, Moshe. Golem: Jewish Magical and Mystical Traditions on the Artificial Anthropoid. Albany: SUNY Press, 1990.

- Kania, Elsa B. “AI Weapons and China’s Military Innovation.” Brookings Institution Report. April 2020.

- Kaplan, Jared, et al. “Scaling Laws for Neural Language Models.” arXiv preprint arXiv:2001.08361 (2020).

- Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. “ImageNet Classification with Deep Convolutional Neural Networks.” Communications of the ACM 60, no. 6 (2017): 84–90.

- Laird, John E. The Soar Cognitive Architecture. Cambridge, MA: MIT Press, 2012.

- LeCun, Yann, Yoshua Bengio, and Geoffrey Hinton. “Deep Learning.” Nature 521, no. 7553 (2015): 436–444.

- Legg, Shane, and Marcus Hutter. “A Collection of Definitions of Intelligence.” Frontiers in Artificial Intelligence and Applications 157 (2007): 17–24.

- Leibniz, Gottfried Wilhelm. Philosophical Essays. Edited and translated by Roger Ariew and Daniel Garber. Indianapolis: Hackett Publishing Company, 1989.

- Leike, Jan, et al. “Scalable Agent Alignment via Reward Modeling: A Research Agenda.” arXiv preprint arXiv:1811.07871 (2018).

- Leike, Jan, et al. “Superhuman AI for Multiplayer Online Battle Arena Games.” Science 364, no. 6443 (2019): 885–890.

- Marcus, Gary. “Deep Learning Is Hitting a Wall.” Nautilus. March 10, 2022.

- Marcus, Gary, and Ernest Davis. Rebooting AI: Building Artificial Intelligence We Can Trust. New York: Pantheon Books, 2019.

- McCarthy, John. “Recursive Functions of Symbolic Expressions and Their Computation by Machine, Part I.” Communications of the ACM 3, no. 4 (1960): 184–195.

- McCarthy, John, and Patrick J. Hayes. “Some Philosophical Problems from the Standpoint of Artificial Intelligence.” In Machine Intelligence 4, edited by Bernard Meltzer and Donald Michie, 463–502. Edinburgh: Edinburgh University Press, 1969.

- McClelland, James L., and David E. Rumelhart, eds. Parallel Distributed Processing: Explorations in the Microstructure of Cognition. Vol. 1. Cambridge, MA: MIT Press, 1986.

- McCorduck, Pamela. Machines Who Think: A Personal Inquiry into the History and Prospects of Artificial Intelligence. 2nd ed. Natick, MA: A.K. Peters, 2004.

- McCulloch, Warren S., and Walter Pitts. “A Logical Calculus of the Ideas Immanent in Nervous Activity.” The Bulletin of Mathematical Biophysics 5, no. 4 (1943): 115–133.

- Minsky, Marvin, and Seymour Papert. Perceptrons: An Introduction to Computational Geometry. Cambridge, MA: MIT Press, 1969.

- Newell, Allen, and Herbert A. Simon. “The Logic Theory Machine: A Complex Information Processing System.” IRE Transactions on Information Theory 2, no. 3 (1956): 61–79.

- Newell, Allen, and Herbert A. Simon. Human Problem Solving. Englewood Cliffs, NJ: Prentice-Hall, 1972.

- Nilsson, Nils J. Artificial Intelligence: A New Synthesis. San Francisco: Morgan Kaufmann, 1998.

- Nilsson, Nils J. The Quest for Artificial Intelligence: A History of Ideas and Achievements. Cambridge: Cambridge University Press, 2010.

- OpenAI. “Introducing GPT-4.” OpenAI Blog. March 14, 2023. https://openai.com/research/gpt-4.

- Plato. Phaedrus. Translated by Robin Waterfield. Oxford: Oxford University Press, 2002.

- Radford, Alec, et al. “Learning Transferable Visual Models from Natural Language Supervision.” International Conference on Machine Learning (2021).

- Reed, Scott, et al. “A Generalist Agent.” arXiv preprint arXiv:2205.06175 (2022).

- Renda, Andrea. “Artificial Intelligence: Ethics, Governance and Policy Challenges.” European Parliament Research Service. June 2019.

- Riskin, Jessica. The Restless Clock: A History of the Centuries-Long Argument over What Makes Living Things Tick. Chicago: University of Chicago Press, 2016.

- Rumelhart, David E., Geoffrey E. Hinton, and Ronald J. Williams. “Learning Representations by Back-Propagating Errors.” Nature 323, no. 6088 (1986): 533–536.

- Shannon, Claude. “A Mathematical Theory of Communication.” Bell System Technical Journal 27, no. 3 (1948): 379–423.

- Swade, Doron. The Difference Engine: Charles Babbage and the Quest to Build the First Computer. New York: Viking, 2000.

- Toole, Betty Alexandra. Ada, the Enchantress of Numbers: Prophet of the Computer Age. Mill Valley: Strawberry Press, 1992.

- Turing, Alan. “Computing Machinery and Intelligence.” Mind 59, no. 236 (1950): 433–460.

- Turing, Alan. “On Computable Numbers, with an Application to the Entscheidungsproblem.” Proceedings of the London Mathematical Society, 2nd ser., 42 (1936): 230–265.

- Vaswani, Ashish, et al. “Attention Is All You Need.” Advances in Neural Information Processing Systems 30 (2017): 5998–6008.

Originally published by Brewminate, 05.26.2025, under the terms of a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International license.