Neural networks matured into deep learning, Bayesian reasoning undergirds modern probabilistic AI, and reinforcement learning powers today’s autonomous agents.

By Matthew A. McIntosh

Public Historian

Brewminate

Introduction

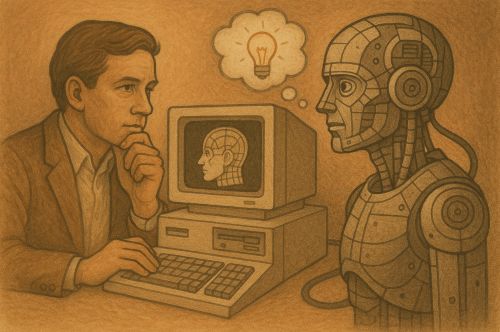

The 1980s marked one of the most ambitious decades in the modern history of artificial intelligence. What had begun in the postwar period as a speculative research program in logic and computation now burst onto the global stage as a matter of government policy, corporate investment, and entrepreneurial daring. The promise of expert systems, the rediscovery of neural networks, and the glamour of robotics all converged in a climate of exuberant expectation. Yet this same climate, fed by hype and strategic rivalry, also laid the groundwork for disillusionment.

The “AI boom” of the 1980s was not simply a technical phenomenon but a cultural one. It inspired headlines, attracted venture capital, provoked fears of job automation, and seeped into popular culture through science fiction films and mass-media speculation. At its core was a belief that human reasoning could be mechanized, scaled, and deployed in commerce, medicine, and warfare. The boom produced real technical breakthroughs, but its legacies are also lessons in the limits of enthusiasm, the fragility of markets, and the difficulty of encoding intelligence into machines.

Expert Systems and the Knowledge Revolution

The expert system was the workhorse of the boom. Designed to capture the decision-making processes of human specialists, these systems used “if-then” production rules stored in knowledge bases. The most famous early example, MYCIN, developed at Stanford in the 1970s, advised physicians on bacterial infections. Though never deployed clinically, MYCIN demonstrated that reasoning under uncertainty could be formalized using certainty factors.1
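To make the idea of rule-based reasoning under uncertainty concrete, here is a minimal sketch of how an if-then rule might carry a numeric measure of belief, usually called a certainty factor, and how two pieces of evidence for the same conclusion might be merged. The combination formula is the one commonly attributed to MYCIN and its EMYCIN shell; the function names, the 0.2 firing threshold as a default argument, and the sample numbers are assumptions chosen for illustration, not details taken from the article.

```python
def fire_rule(premise_cfs, rule_cf, threshold=0.2):
    """Belief contributed by one if-then rule.

    premise_cfs: certainty factors of the rule's premises (each in [-1, 1]).
    rule_cf:     strength the expert attached to the rule itself.
    The premises are ANDed, so the weakest one limits the conclusion.
    """
    support = min(premise_cfs)
    if support <= threshold:      # premises too weakly believed: rule does not fire
        return 0.0
    return rule_cf * support


def combine_cf(cf1, cf2):
    """Merge two certainty factors that bear on the same conclusion."""
    if cf1 >= 0 and cf2 >= 0:     # both pieces of evidence support it
        return cf1 + cf2 * (1 - cf1)
    if cf1 < 0 and cf2 < 0:       # both pieces of evidence oppose it
        return cf1 + cf2 * (1 + cf1)
    denom = 1 - min(abs(cf1), abs(cf2))
    if denom == 0:                # +1 against -1: total contradiction
        raise ValueError("cannot combine fully contradictory evidence")
    return (cf1 + cf2) / denom


# Hypothetical example: two rules both suggest the same organism.
from_rule_a = fire_rule([0.9, 0.75], rule_cf=0.8)   # limited by the 0.75 premise
from_rule_b = fire_rule([0.5], rule_cf=0.8)
belief = combine_cf(from_rule_a, from_rule_b)
print(round(belief, 3))
```

Two supporting rules never push belief past 1.0, and each new rule adds less than the one before it, which is one reason the scheme felt natural to the physicians who supplied the rules.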

Commercialization followed rapidly. Digital Equipment Corporation adopted XCON (also known as R1), an expert system that configured orders for its complex VAX computers. By the mid-1980s XCON contained thousands of rules and was credited with saving DEC millions of dollars annually.2 Similar systems proliferated in finance, mineral and oil exploration, and customer service. SRI International’s PROSPECTOR helped evaluate mineral deposits, while banks experimented with systems for credit assessment.

This period was heralded as the “knowledge revolution.” Rather than pursue grandiose “general problem solvers” as in the 1960s, AI researchers argued that knowledge was power. Expert system shells such as OPS5, EMYCIN, and later NASA’s CLIPS allowed developers to create domain-specific applications. The emphasis on applied knowledge gave AI a new pragmatic credibility. Yet the brittleness of expert systems soon became apparent: rules could not easily cope with ambiguity, scaling was cumbersome, and maintenance required armies of knowledge engineers.

Government Funding and Strategic Visions

The AI boom coincided with geopolitical anxieties. In 1982, Japan launched the Fifth Generation Computer Systems Project (FGCS), a ten-year plan to build parallel machines capable of logical inference in natural language. The project, coordinated by the Ministry of International Trade and Industry, promised not just faster computers but a leap toward reasoning machines.3

This initiative alarmed policymakers in the West. DARPA responded with the Strategic Computing Initiative (1983), investing hundreds of millions of dollars in AI for autonomous vehicles, a pilot’s associate, and battle management. The Alvey Programme in the UK similarly sought to revitalize British computing by linking AI with VLSI hardware and software engineering. For a time, artificial intelligence became a proxy battleground for national prestige, a digital equivalent of the space race.

The results were mixed. The FGCS produced advances in parallel logic programming but fell short of its promise of human-like reasoning. DARPA’s ambitious goals, such as self-navigating tanks and autonomous fighter aircraft, exceeded the capacity of brittle AI software and limited computing power. Yet these investments institutionalized AI research, creating laboratories, careers, and networks that endured even after the hype cooled.

Connectionism and Neural Networks

While expert systems consumed funding, another revolution was brewing: connectionism. Long marginalized after the critiques of Minsky and Papert in Perceptrons (1969), neural networks were revived by the rediscovery and popularization of the backpropagation algorithm. In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams showed that multi-layer perceptrons could learn internal representations by adjusting their weights in proportion to errors propagated backward from the output.4
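The mechanics of error propagation can be sketched in a few lines. The example below is an illustrative toy, not the 1986 paper's code: it assumes a network with two inputs, two sigmoid hidden units, and one sigmoid output, a squared-error loss, and plain gradient descent. All function names and the sample weights are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w_hidden, w_out, x):
    """Return (hidden activations, output) for one input vector x.

    Every weight row ends with a bias weight, so a constant 1.0 is appended
    to the inputs of each layer.
    """
    xb = x + [1.0]
    h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_hidden]
    y = sigmoid(sum(w * v for w, v in zip(w_out, h + [1.0])))
    return h, y


def backprop(w_hidden, w_out, x, target):
    """Gradients of 0.5 * (y - target)**2 with respect to every weight."""
    h, y = forward(w_hidden, w_out, x)
    # Error signal at the output unit (chain rule through the sigmoid).
    delta_out = (y - target) * y * (1.0 - y)
    grad_out = [delta_out * v for v in h + [1.0]]
    # Push the error signal back through the output weights to each hidden unit.
    grad_hidden = []
    for j, hj in enumerate(h):
        delta_j = delta_out * w_out[j] * hj * (1.0 - hj)
        grad_hidden.append([delta_j * v for v in x + [1.0]])
    return grad_hidden, grad_out


def sgd_step(w_hidden, w_out, x, target, lr=0.1):
    """One gradient-descent update; returns new weight lists."""
    grad_hidden, grad_out = backprop(w_hidden, w_out, x, target)
    new_hidden = [[w - lr * g for w, g in zip(row, grow)]
                  for row, grow in zip(w_hidden, grad_hidden)]
    new_out = [w - lr * g for w, g in zip(w_out, grad_out)]
    return new_hidden, new_out


# Hypothetical starting weights and a single training example.
w_h = [[0.1, -0.2, 0.05], [0.3, 0.4, -0.1]]
w_o = [0.2, -0.3, 0.1]
w_h2, w_o2 = sgd_step(w_h, w_o, [1.0, 0.0], target=1.0)
```

Repeating `sgd_step` over many examples is what "learning" means here: no rule is ever written down, and whatever the network knows ends up distributed across the weights.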

The publication of Parallel Distributed Processing by Rumelhart, McClelland, and the PDP Research Group in the same year became a manifesto for connectionism. Networks could model cognitive phenomena such as word recognition and grammar acquisition, challenging the dominance of rule-based symbolic AI. Though computational limits prevented large-scale deployment, neural nets attracted a younger generation of researchers intrigued by their biological plausibility. The seeds planted in the 1980s would blossom decades later in the deep learning revolution.

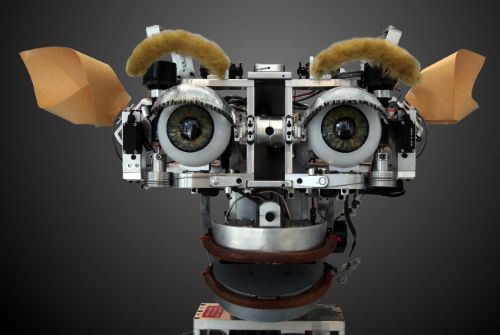

Robotics and Embodied Reason

The 1980s also saw the rise of robotics as a testbed for artificial intelligence. While industrial robots had been in use since the 1960s, most were rigidly programmed machines. Researchers such as Rodney Brooks at MIT advocated a different approach: embodied intelligence, where adaptive behavior emerged from interaction with the environment rather than centralized symbolic planning.5

Robots such as Allen and Genghis demonstrated insect-like navigation through layered control architectures. This bottom-up style challenged the high-level planning emphasis of symbolic AI. Meanwhile, in Japan, industrial robotics integrated fuzzy logic controllers, producing more flexible responses on assembly lines. Though limited in their “intelligence,” these developments shifted AI toward embodiment, laying groundwork for the autonomous systems of later decades.

Probabilistic Methods and Bayesian Networks

Another departure from brittle rules came with soft computing and probabilistic methods. Lotfi Zadeh’s fuzzy logic, though formulated in the 1960s, found wide application in Japan during the 1980s, particularly in consumer electronics. Fuzzy controllers were installed in washing machines, cameras, and subway braking systems, marketed as “smart” appliances.6

Simultaneously, Judea Pearl’s work on Bayesian networks reframed reasoning under uncertainty. By representing probabilistic dependencies as graphs, Pearl demonstrated that complex diagnostic and causal reasoning could be computed rigorously.7 This marked a major epistemological shift in AI: uncertainty was no longer a weakness but a central feature of intelligent reasoning.
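A small worked example may help show what representing dependencies as a graph buys. The sketch below assumes a three-node network in which a burglary and an earthquake can each trigger an alarm, and it answers diagnostic queries by brute-force enumeration of the joint distribution. The probabilities are illustrative textbook-style numbers, not figures from the article, and enumeration is used only because it is the simplest method to read; Pearl's own contribution included far more efficient message-passing schemes.

```python
from itertools import product

# Illustrative (assumed) probabilities for the graph
#   Burglary -> Alarm <- Earthquake
P_BURGLARY = 0.001
P_EARTHQUAKE = 0.002
P_ALARM = {  # P(alarm | burglary, earthquake)
    (True, True): 0.95,
    (True, False): 0.94,
    (False, True): 0.29,
    (False, False): 0.001,
}


def joint(b, e, a):
    """P(B=b, E=e, A=a), factored along the edges of the graph."""
    pb = P_BURGLARY if b else 1 - P_BURGLARY
    pe = P_EARTHQUAKE if e else 1 - P_EARTHQUAKE
    pa = P_ALARM[(b, e)] if a else 1 - P_ALARM[(b, e)]
    return pb * pe * pa


def posterior_burglary(evidence):
    """P(burglary | evidence), where evidence maps 'e' and/or 'a' to booleans."""
    num = den = 0.0
    for b, e, a in product([True, False], repeat=3):
        world = {"b": b, "e": e, "a": a}
        if any(world[k] != v for k, v in evidence.items()):
            continue              # this world contradicts what was observed
        p = joint(b, e, a)
        den += p
        if b:
            num += p
    return num / den


alarm_only = posterior_burglary({"a": True})
alarm_and_quake = posterior_burglary({"a": True, "e": True})
print(round(alarm_only, 4), round(alarm_and_quake, 4))
```

With these numbers, learning that an earthquake occurred sharply lowers the probability of a burglary, because the earthquake already accounts for the alarm. Independent if-then rules struggle to reproduce this "explaining away" pattern, whereas it falls out of the graph automatically.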

Reinforcement Learning

The 1980s also witnessed foundational work in reinforcement learning. Inspired by psychology and control theory, Richard Sutton and Andrew Barto developed algorithms whereby agents could learn through trial-and-error interactions, guided by rewards. Their framework, later consolidated in their textbook Reinforcement Learning: An Introduction (1998), provided a new paradigm for adaptive behavior.8 Though initially confined to toy problems, reinforcement learning offered a vision of agents that could improve autonomously rather than rely on hand-coded rules.
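The kind of toy problem this early work dealt with can be sketched briefly. The example below uses tabular Q-learning, one well-known temporal-difference method, swapped in here for illustration rather than taken from the article. The environment is an assumed five-cell corridor in which the agent starts at the left end and receives a reward of 1 only on reaching the right end; every name and parameter value is hypothetical.

```python
import random

N_STATES = 5            # cells 0..4 in a corridor (assumed toy environment)
GOAL = N_STATES - 1     # reaching the rightmost cell ends the episode
LEFT, RIGHT = 0, 1


def step(state, action):
    """Move one cell; bumping the left wall leaves the agent in place."""
    nxt = max(0, state - 1) if action == LEFT else state + 1
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values purely from trial, error, and reward."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore uniformly at random; Q-learning still learns the
            # values of the greedy policy because it is off-policy.
            action = rng.choice((LEFT, RIGHT))
            nxt, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[nxt])
            # Temporal-difference update: nudge the estimate toward the target.
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


q = q_learning()
policy = ["right" if q[s][RIGHT] > q[s][LEFT] else "left" for s in range(GOAL)]
print(policy)
```

Nobody tells the agent that moving right is correct. The preference emerges because reward at the goal is gradually propagated backward through the value estimates, discounted by `gamma` at each step.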

Case Studies: Startups and Unicorns

Symbolics and Lisp Machines

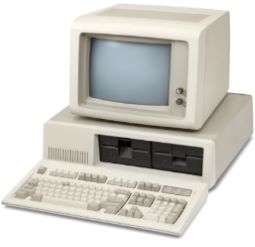

Perhaps no firm symbolized the AI boom more than Symbolics, founded in 1980 to market Lisp machines optimized for AI programming. Symbolics briefly enjoyed success, selling workstations to research labs and companies building expert systems. Its valuation soared, and in March 1985 it registered symbolics.com, the first .com domain. Yet as general-purpose UNIX workstations grew cheaper and more powerful, the specialized Lisp machine market collapsed. Symbolics declared bankruptcy in the 1990s, a cautionary tale of hype meeting hardware reality.9

Thinking Machines Corporation

Founded in 1983 by Danny Hillis, Thinking Machines Corporation sought to realize massively parallel computing through its Connection Machine. With striking black cube aesthetics and marketing flair, the Connection Machine was celebrated as the hardware embodiment of connectionism. Backed by DARPA and venture capital, the company became a darling of the AI boom. Yet its hardware proved expensive and niche; while it contributed to research in physics and cryptography, it failed to revolutionize AI as promised. In 1994, Thinking Machines filed for bankruptcy.10

Teknowledge and IntelliCorp

Expert system consultancies such as Teknowledge and IntelliCorp went public in the early 1980s, with share prices soaring amid AI euphoria. They provided shells and tools for building knowledge-based systems, promising to automate corporate expertise. But as clients discovered the high costs of knowledge engineering and the brittleness of applications, revenues dwindled. By the end of the decade, the market cooled dramatically, leaving investors wary of AI promises.

These “unicorns” of their day embodied the optimism and volatility of the AI boom. Their trajectories foreshadowed later cycles in dot-com and machine learning startups: dazzling valuations, spectacular claims, and eventual correction.

Culture, Hype, and the AI Imagination

The 1980s AI boom unfolded not only in laboratories and boardrooms but also in the cultural imagination. Films such as Blade Runner (1982) and The Terminator (1984) dramatized fears of intelligent machines surpassing human control. Media outlets speculated about job automation, intelligent weapons, and the dawning of a “thinking machine” era. Books with titles like Machines Who Think popularized AI for a mass audience.

This cultural climate amplified expectations: if Hollywood could conjure artificial persons, why not MIT or DARPA? The very ubiquity of AI in popular culture heightened the stakes of its inevitable disappointments. When expert systems failed to scale and Lisp machines proved costly, the disillusionment was correspondingly intense.

Conclusion

The artificial intelligence boom of the 1980s was a crucible of ambition, funding, and cultural projection. Expert systems promised to codify knowledge; governments poured resources into strategic projects; connectionists revived neural networks; roboticists embraced embodiment; fuzzy logic and Bayesian reasoning redefined uncertainty; and reinforcement learning gestured toward autonomous adaptation. Startups became unicorns before collapsing into bankruptcy.

Though the decade ended in an AI winter, its legacies remain foundational. Neural networks matured into deep learning, Bayesian reasoning undergirds modern probabilistic AI, and reinforcement learning powers today’s autonomous agents. Even the failures contributed: they taught caution in overpromising, humility in the face of complexity, and persistence in the pursuit of machine intelligence. The 1980s AI boom was thus not a failure but a necessary stage in the long and uneven history of artificial intelligence, a decade where the dream of the thinking machine burned brightly, even if too briefly.

Appendix

Notes

1. Bruce G. Buchanan and Edward H. Shortliffe, Rule-Based Expert Systems: The MYCIN Experiments of the Stanford Heuristic Programming Project (Reading, MA: Addison-Wesley, 1984), 55–78.

2. Randall Davis, “Expert Systems: Where Are We? And Where Do We Go from Here?” AI Magazine 3, no. 2 (1982): 3–22.

3. Christopher Freeman and Luc Soete, The Economics of Industrial Innovation, 3rd ed. (Cambridge, MA: MIT Press, 1997), 369–372.

4. David E. Rumelhart, Geoffrey E. Hinton, and Ronald J. Williams, “Learning Representations by Back-Propagating Errors,” Nature 323 (1986): 533–536.

5. Rodney A. Brooks, “A Robust Layered Control System for a Mobile Robot,” IEEE Journal of Robotics and Automation 2, no. 1 (1986): 14–23.

6. George J. Klir and Bo Yuan, eds., Fuzzy Sets, Fuzzy Logic, and Fuzzy Systems: Selected Papers by Lotfi A. Zadeh (New Jersey: World Scientific, 1996).

7. Judea Pearl, Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference (San Mateo, CA: Morgan Kaufmann, 1988).

8. Richard S. Sutton, “Learning to Predict by the Methods of Temporal Differences,” Machine Learning 3 (1988): 9–44.

9. Paul Ceruzzi, A History of Modern Computing, 2nd ed. (Cambridge, MA: MIT Press, 1998), 255–257.

10. W. Daniel Hillis, The Connection Machine (Cambridge, MA: MIT Press, 1985).

Bibliography

- Brooks, Rodney A. “A Robust Layered Control System for a Mobile Robot.” IEEE Journal of Robotics and Automation 2, no. 1 (1986): 14–23.

- Buchanan, Bruce G., and Edward H. Shortliffe. Rule-Based Expert Systems: The MYCIN Experiments of the Stanford Heuristic Programming Project. Reading, MA: Addison-Wesley, 1984.

- Ceruzzi, Paul. A History of Modern Computing. 2nd ed. Cambridge, MA: MIT Press, 1998.

- Davis, Randall. “Expert Systems: Where Are We? And Where Do We Go from Here?” AI Magazine 3, no. 2 (1982): 3–22.

- Freeman, Christopher, and Luc Soete. The Economics of Industrial Innovation. 3rd ed. Cambridge, MA: MIT Press, 1997.

- Hillis, W. Daniel. The Connection Machine. Cambridge, MA: MIT Press, 1985.

- Klir, George J., and Bo Yuan, eds. Fuzzy Sets, Fuzzy Logic, and Fuzzy Systems: Selected Papers by Lotfi A. Zadeh. New Jersey: World Scientific, 1996.

- Pearl, Judea. Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. San Mateo, CA: Morgan Kaufmann, 1988.

- Rumelhart, David E., Geoffrey E. Hinton, and Ronald J. Williams. “Learning Representations by Back-Propagating Errors.” Nature 323 (1986): 533–536.

- Sutton, Richard S. “Learning to Predict by the Methods of Temporal Differences.” Machine Learning 3 (1988): 9–44.

Originally published by Brewminate, 08.20.2025, under the terms of a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International license.