In his hands it becomes a tool of oppression.

By Matthew A. McIntosh

Public Historian

Brewminate

Introduction

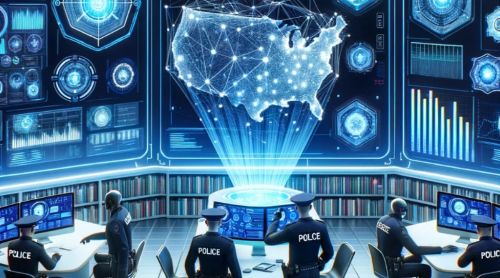

The growing integration of artificial intelligence (AI) into governance, security, and economic systems poses serious implications for democracy, privacy, and civil liberties. These concerns become especially pronounced when contemplating the return of Donald J. Trump to the presidency of the United States. A second Trump administration, informed by past behavior, ideological rhetoric, and authoritarian inclinations, could wield AI technologies in ways that threaten democratic institutions, civil rights, and global stability.

This article outlines the multifaceted risks associated with the use of AI under a second Trump administration, drawing on documented actions from his first term, current political movements, and emerging technological capabilities.

AI and Authoritarianism: A Natural Symbiosis

Artificial intelligence, while a powerful tool for efficiency and innovation, has an equally potent capacity for control and coercion. AI’s capabilities for surveillance, disinformation, predictive policing, and behavioral profiling are already being exploited by authoritarian regimes, most notably in China and Russia. These governments have used AI to identify dissidents, censor dissent, and manipulate public opinion at scale (Mozur, 2019; Soldatov & Borogan, 2022).

The Trump administration may replicate or adapt these models. Trump has expressed admiration for authoritarian leaders and has shown a tendency to blur the lines between government authority and personal loyalty (Rucker & Leonnig, 2020). His first term saw aggressive crackdowns on whistleblowers, journalists, and protestors, often involving technology-enabled surveillance (ACLU, 2020).

The concern is not simply hypothetical. The United States possesses AI technologies more advanced than those of many autocratic nations. In the wrong hands, such power could be turned inward, reshaping democratic structures into tools of domination.

Mass Surveillance and Civil Liberties

Trump’s approach to surveillance alarmed civil liberties advocates during his first term. For example, the 2020 protests following the killing of George Floyd were met with widespread surveillance by federal agencies using facial recognition, drones, and phone-tracking technology (The Guardian, 2020). AI-enhanced surveillance would significantly amplify these practices.

Trump and his allies have repeatedly targeted specific groups—including immigrants, Muslims, journalists, and political opponents. With AI, the potential for building automated systems that monitor, categorize, and penalize “undesirable” populations becomes dangerously feasible. Trump’s aggressive stance on immigration already resulted in databases and predictive models used to locate and deport undocumented individuals (ACLU, 2018). In a second term, these systems could be expanded and enhanced through AI.

During Trump’s first term, the Department of Homeland Security considered proposals to use AI to predict the likelihood of an immigrant becoming a “positively contributing member of society” (Noble, 2018). Such “predictive vetting” not only encodes bias but also opens the door to eugenics-era reasoning, leveraging algorithms to enforce ideological conformity. These efforts and ideas continue in the current administration.

Weaponization of Disinformation

AI tools like deepfakes and large language models can be employed to fabricate images, videos, and entire narratives. The use of such technologies in disinformation campaigns has already been documented in various geopolitical contexts (Chesney & Citron, 2019). A Trump-aligned media and political apparatus—already known for propagating falsehoods—could exploit generative AI to amplify misinformation campaigns to an unprecedented degree.

During his first term, Trump repeatedly labeled factual reporting as “fake news,” attacked journalists, and spread conspiracy theories—actions that eroded public trust in information itself. With AI-generated content, a second Trump administration would be uniquely equipped to flood the information space with propaganda that blurs the line between reality and fabrication.

The implications are dire: AI-powered bots could overwhelm social media, deepfakes could be used to discredit political opponents or incite violence, and algorithmic curation could reinforce ideological echo chambers, radicalizing segments of the population.

Predictive Policing and Racial Bias

AI is increasingly used in predictive policing, parole decisions, and sentencing. These systems have been widely criticized for perpetuating racial and socioeconomic bias (Angwin et al., 2016). Trump’s law-and-order rhetoric, combined with a historical willingness to overlook systemic racism, could lead to an aggressive expansion of these tools.

During the 2020 protests, Trump deployed federal agents in unmarked vehicles to detain protestors and referred to urban unrest in racialized terms. If predictive policing tools were employed at his direction, they could reinforce existing racial profiling while being shielded by the presumed objectivity of algorithms.

Moreover, AI systems used in the criminal justice system often lack transparency. With little federal oversight and a politicized Department of Justice, a Trump administration could further obscure these processes, making it harder for civil rights groups to challenge unjust outcomes.

AI in Immigration Control and Border Enforcement

The Trump administration’s immigration policies are defined by harsh enforcement and high-tech surveillance. Programs like “Extreme Vetting” and CBP’s use of facial recognition at border crossings illustrate a broader strategy of using emerging technologies to police borders (Electronic Frontier Foundation, 2020).

AI would make these efforts more efficient and pervasive. Computer vision systems could be used to track and identify migrants in real time, drones equipped with machine learning could monitor vast stretches of the southern border, and predictive analytics could be used to prioritize enforcement targets. These capabilities, combined with Trump’s political goals, risk turning the southern border into a dystopian surveillance state.

Undermining Democratic Processes

Perhaps the gravest danger lies in AI’s potential to subvert democratic elections. Deepfake videos could be used to discredit candidates or incite violence. Chatbots powered by AI could engage in voter suppression campaigns, spreading misinformation about polling locations or voter eligibility.

Trump’s refusal to accept the 2020 election results, culminating in the January 6th insurrection, demonstrated a willingness to dismantle democratic norms when they conflict with personal ambition. His second term has seen the appointment of loyalists to key agencies like the Department of Justice and Federal Election Commission—actors who might enable or ignore abuses of AI in the electoral process.

AI tools could also be used to gerrymander electoral maps, microtarget voters with tailored propaganda, and suppress turnout in opposition communities. The very fabric of democratic participation could be manipulated by invisible code executing the will of a demagogue.

Lack of Oversight and Regulatory Capture

One of the most pressing concerns about AI is the lack of regulatory infrastructure. The Trump administration’s deregulatory stance and hostility toward science and expert agencies will further weaken efforts to responsibly govern AI.

Trump’s past and current appointments—such as putting industry lobbyists in charge of environmental policy and defunding scientific research—suggest a pattern of regulatory capture. In such an environment, corporate actors aligned with the administration could steer AI development toward profit and control, rather than ethics and transparency.

In contrast, the European Union has adopted the AI Act, a comprehensive regulatory framework. The U.S., however, remains far behind. A second Trump term would likely delay or dismantle the little progress made under the Biden administration, leaving the most powerful technology in human history largely ungoverned.

Conclusion

Artificial intelligence has the potential to revolutionize human society. But in the hands of a leadership that disrespects democratic norms, scapegoats vulnerable populations, and thrives on disinformation, it becomes a tool not of liberation, but of oppression.

Trump has inherited a far more advanced AI infrastructure than existed during his first term—one capable of enabling systemic surveillance, algorithmic discrimination, and widespread manipulation of public opinion. Without strong checks and balances, the United States risks descending into a techno-authoritarian state under the veneer of populist nationalism.

The use of AI in government must be accompanied by robust ethical standards, transparency, and democratic accountability. In their absence, we may be witnessing not the rise of intelligent governance, but the beginning of algorithmic tyranny.

References

- American Civil Liberties Union (ACLU). (2018). ICE’s Risk Assessment Software is Biased Against Immigrants.

- American Civil Liberties Union (ACLU). (2020). Government Surveillance During Black Lives Matter Protests.

- Angwin, J., Larson, J., Mattu, S., & Kirchner, L. (2016). Machine Bias. ProPublica.

- Chesney, R., & Citron, D. (2019). Deep Fakes: A Looming Challenge for Privacy, Democracy, and National Security. California Law Review, 107(6), 1753–1819.

- Electronic Frontier Foundation. (2020). Border Surveillance.

- Mozur, P. (2019). One Month, 500,000 Face Scans: How China Is Using A.I. to Profile a Minority. The New York Times.

- Noble, S. U. (2018). Algorithms of Oppression: How Search Engines Reinforce Racism. NYU Press.

- Rucker, P., & Leonnig, C. (2020). A Very Stable Genius: Donald J. Trump’s Testing of America. Penguin Press.

- Soldatov, A., & Borogan, I. (2022). The Compatriots: The Brutal and Chaotic History of Russia’s Exiles, Émigrés, and Agents Abroad. PublicAffairs.

- The Guardian. (2020). Federal agents deployed drones and aircraft over George Floyd protests.

Originally published by Brewminate, 05.26.2025, under the terms of a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International license.